Source – finextra.com

The financial services industry has witnessed considerable hype around Artificial Intelligence (AI) in recent months. It is the subject of a slew of media articles, conference keynotes and think-tanks tasked with leading the revolution. AI indeed appears to be the new gold rush for large organisations and FinTech companies alike. However, with little common understanding of what AI really entails, there is a growing fear of missing the boat on a technology hailed as the ‘holy grail of the data age.’ Devising an AI strategy has therefore become a boardroom conundrum for many business leaders.

How did it come to this, especially since less than two decades ago most popular references to artificial intelligence were in sci-fi movies? Will AI revolutionise the world of financial services? And more specifically, what does it bring to the party when it comes to fraud detection? Let’s separate fact from fiction and explore what lies beyond the inflated expectations.

Why now?

Many practical ideas involving AI have been developed since the late 90s and early 00s, but we’re only now seeing a surge in the implementation of AI-driven use-cases. There are two main drivers behind this: new data assets and increased computational power. As the industry embraced big data, the breadth and depth of data within financial institutions have grown exponentially, powered by low-cost, distributed systems such as Hadoop. Computing power is also heavily commoditised, as evidenced by modern smartphones that are now as powerful as many legacy business servers. The time for AI has come, but reaching operational maturity will be a journey for organisations rather than a binary switch.

Don’t run before you can walk

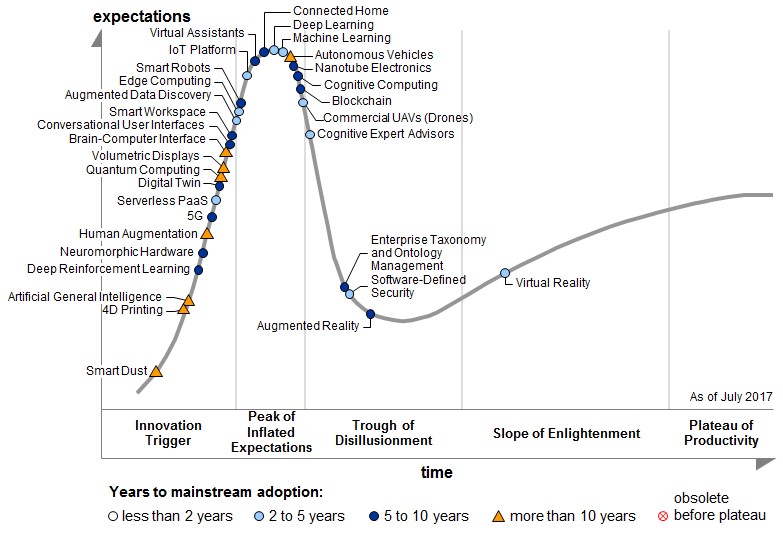

The Gartner Hype Cycle for Emerging Technologies (Figure 1) implies that there is a disconnect between today’s reality and the vision for AI, an observation shared by many industry analysts. The research suggests that machine learning and deep learning could take two to five years to meet market expectations, while artificial general intelligence (commonly referred to as strong AI, i.e. automation that could successfully perform any intellectual task in the same capacity as a human) could take up to 10 years to reach mainstream adoption.

Other publications predict that the pace could be much faster. The IDC FutureScape report suggests that “cognitive computing, artificial intelligence and machine learning will become the fastest growing segments of software development by the end of 2018; by 2021, 90% of organizations will be incorporating cognitive/AI and machine learning into new enterprise apps.”

AI adoption may still be in its infancy, but new implementations have gained significant momentum and early results show huge promise. For most financial organisations faced with rising fraud losses and the prohibitive costs linked to investigations, AI is increasingly positioned as a key technology to help automate instant fraud decisions, maximise detection performance and streamline alert volumes in the near future.

Data is the rocket fuel

Whilst AI certainly has the potential to add significant value in the detection of fraud, deploying a successful model is no simple feat. For every successful AI model, there are far more failed attempts than most would care to admit, and the root cause is often data. Data is the fuel for an operational risk engine: poor input will lead to sub-optimal results, no matter how good the detection algorithms are. In practice, this means noisier fraud alerts, with more false positives, as well as more undetected cases.

On top of generic data concerns, there are additional, often overlooked factors which directly impact the effectiveness of data used for fraud management:

- Geographical variances in data.

- Varying risk appetites across products and channels.

- Accuracy of fraud classification (i.e. what proportion of the alerts marked as fraud turn out to be confirmed cases).

- Relatively rare occurrence of fraud compared to the huge bulk of transactions; having a suitable sample to train a model isn’t always guaranteed.
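The last two factors in this list can be measured before any model is trained. The following is a minimal Python sketch, not a production method: the function names and the toy figures are hypothetical, and the inverse-frequency weighting shown is just one common heuristic for compensating for rare fraud cases in a training sample.

```python
from collections import Counter


def alert_precision(alert_outcomes):
    """Share of raised alerts that were later confirmed as fraud.

    alert_outcomes: list of booleans, one per alert (True = confirmed fraud).
    """
    if not alert_outcomes:
        return 0.0
    return sum(1 for confirmed in alert_outcomes if confirmed) / len(alert_outcomes)


def fraud_rate(labels):
    """Share of all transactions labelled as fraud (1 = fraud, 0 = genuine)."""
    if not labels:
        return 0.0
    return sum(labels) / len(labels)


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Assumption: this 'balanced' heuristic is used purely for illustration;
    other resampling or weighting schemes may suit a given portfolio better.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical sample: 1,000 transactions, of which 2 are fraudulent.
labels = [0] * 998 + [1] * 2
# Hypothetical investigation results for four alerts.
outcomes = [True, False, False, True]

print(f"Fraud rate: {fraud_rate(labels):.3%}")
print(f"Alert precision: {alert_precision(outcomes):.0%}")
print(f"Class weights: {inverse_frequency_weights(labels)}")
```

With only two fraud cases in a thousand, each fraud example would need to count 250 times as much as it does unweighted to balance the classes, which illustrates why a suitable training sample cannot be taken for granted.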

Ensuring that data meets minimum benchmarks is therefore critical, especially with ongoing digitalisation programmes which will subject banks to an avalanche of new data assets. These can certainly help augment fraud detection capabilities but need to be balanced with increased data protection and privacy regulations.

For instance, the General Data Protection Regulation (GDPR) comes into force in May 2018 across EU member states. Under it, utilising new data assets such as device, geo-location or behavioural insight derived from session data may not be possible unless the customer has consented. This is a notable change from previous national legislation such as the UK’s Data Protection Act, where the Section 29(1) exemption allowed for ‘personal data processed for specified purposes of crime prevention/detection, apprehension/prosecution of offenders or imposition of tax or similar duties’ without explicit consent.

A hybrid ecosystem for fraud detection

Techniques available under the banner of artificial intelligence such as machine learning, deep learning, etc. are powerful assets but all seasoned counter-fraud professionals know the adage: Don’t put all your eggs in one basket.

Relying solely on predictive analytics to guard against fraud would be a naïve decision. In the context of the PSD2 (revised Payment Services Directive) regulation across EU member states, a new payment channel is being introduced along with new payment actors and services, which will in turn drive new customer behaviour. Without historical data, predictive AI techniques will be starved of a valid training sample and therefore be rendered ineffective in the short term. Instead, the new risk factors can be mitigated through business scenarios and anomaly detection using peer group analysis, as part of a hybrid detection approach.
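Peer group analysis needs no labelled fraud history, which is what makes it useful on a new channel. As a rough illustration only, the Python sketch below compares each customer with the median of their own peer group and flags large deviations. The customer IDs, peer groups and amounts are made up, and the modified z-score with a 3.5 cut-off is an assumed rule of thumb rather than a method prescribed by the article.

```python
from statistics import median


def peer_group_outliers(amounts, peer_group_of, threshold=3.5):
    """Flag customers whose amount deviates strongly from their peer group.

    amounts: dict of customer id -> transaction amount (hypothetical data).
    peer_group_of: dict of customer id -> peer group name.
    threshold: cut-off on the modified z-score (assumed rule of thumb).
    """
    # Collect the amounts observed within each peer group.
    groups = {}
    for customer, amount in amounts.items():
        groups.setdefault(peer_group_of[customer], []).append(amount)

    flagged = []
    for customer, amount in amounts.items():
        peers = groups[peer_group_of[customer]]
        centre = median(peers)
        # Median absolute deviation: a spread measure robust to outliers.
        mad = median([abs(value - centre) for value in peers])
        if mad == 0:
            continue  # No spread in the group, so no meaningful score.
        score = 0.6745 * abs(amount - centre) / mad
        if score > threshold:
            flagged.append(customer)
    return flagged


amounts = {
    "s1": 100, "s2": 120, "s3": 110, "s4": 105, "s5": 115, "s6": 2000,
    "b1": 2000, "b2": 2100, "b3": 1900, "b4": 2050, "b5": 1950,
}
peer_group_of = {c: ("student" if c.startswith("s") else "business") for c in amounts}

print(peer_group_outliers(amounts, peer_group_of))
```

In this toy data, a payment of 2,000 is unremarkable for a business account but stands out sharply among student accounts, so only the student customer is flagged. A single global threshold on the amount could not make that distinction.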

Yet another challenge is translating the output of some AI models into meaningful outcomes. Techniques such as neural networks or deep learning offer great accuracy and statistical fit but can also be opaque, delivering limited insight for interpretation and tuning. A “computer says no” response with no alternative workflows or complementary investigation tools creates friction in the transactional journey in cases of false positives, and may lead to customer attrition and reputational damage. That is a costly outcome in a digital era where customers can easily switch banks from the comfort of their homes.

Holistic view

For effective detection and deterrence, fraud strategists must gain a holistic view over their threat landscape. To achieve this, financial organisations should adopt multi-layered defences – but to ensure success, they need to aim for balance in their strategy. Balance between robust counter-fraud measures and positive customer experience. Balance between rigid internal controls and customer-centricity. And balance between curbing fraud losses and meeting revenue targets. Analytics is the fulcrum that can provide this necessary balance.

AI is a huge cog in the fraud operations machinery, but one must not lose sight of the bigger picture. Real value lies in translating ‘artificial intelligence’ into ‘actionable intelligence’. In doing so, remember that your organisation does not need an AI strategy; instead, let AI help drive your business strategy.