Source: syncedreview.com

Reinforcement Learning (RL) agents seem to get smarter and more powerful every day. But while it is relatively easy to compare a given agent’s performance against other agents or human experts, for example in video games, it is harder to objectively evaluate RL agents’ robustness and their all-important ability to generalize to other environments.

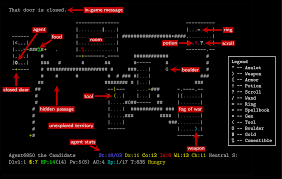

The NetHack Learning Environment (NLE) aims to solve this problem. Introduced by a team of researchers from Facebook AI, University of Oxford, New York University, Imperial College London, and University College London, NLE is a procedurally generated environment for testing the robustness and systematic generalization of RL agents. The name “NetHack” is taken from a popular, procedurally generated dungeon exploration role-playing video game that helped inspire the new environment.

Most of the recent advances in RL and Deep Reinforcement Learning have been driven by the development of novel simulation environments. However, the researchers found that while existing RL environments tend to be either sufficiently complex or based on fast simulation, they are rarely both.

NetHack, the researchers say, combines lightning-fast simulation with very complex game dynamics that are difficult even for humans to master. This lets RL agents experience billions of steps in the environment in a reasonable time frame while still challenging the limits of what current methods can achieve. NetHack is thus sufficiently complex to drive long-term research on problems such as exploration, planning, skill acquisition and language-conditioned RL, while also dramatically reducing the computational resources required to gather a large amount of experience.

The NetHack Learning Environment (NLE) is built on NetHack 3.6.6, the latest available version of the game, and is designed to provide a standard, turn-based RL interface around the game’s usual terminal interface.
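To give a feel for what a turn-based RL interface around a terminal display looks like, here is a minimal sketch in Python. It is not NLE's code: `ToyDungeonEnv`, its one-line corridor map and the `run_episode` helper are hypothetical stand-ins, and the only thing borrowed from common RL practice is the `reset()` / `step(action)` convention, where each call to `step` advances the game by exactly one turn.

```python
import random


class ToyDungeonEnv:
    """Hypothetical stand-in (not NLE): a corridor rendered as terminal text."""

    ACTIONS = ("west", "east")

    def __init__(self, length=8, max_turns=50, seed=0):
        self.length = length
        self.max_turns = max_turns
        self.rng = random.Random(seed)

    def _render(self):
        # '@' is the agent, '>' the staircase, '.' is floor.
        cells = ["."] * self.length
        cells[self.stairs] = ">"
        cells[self.pos] = "@"
        return "".join(cells)

    def reset(self):
        self.turn = 0
        self.pos = 0
        # Procedurally place the staircase anywhere except the start cell.
        self.stairs = self.rng.randrange(1, self.length)
        return self._render()

    def step(self, action):
        # One call advances the game by exactly one turn.
        delta = -1 if self.ACTIONS[action] == "west" else 1
        self.pos = min(max(self.pos + delta, 0), self.length - 1)
        self.turn += 1
        reached = self.pos == self.stairs
        done = reached or self.turn >= self.max_turns
        reward = 1.0 if reached else 0.0
        return self._render(), reward, done, {"turn": self.turn}


def run_episode(env, policy):
    """Play one episode and return (total reward, number of turns taken)."""
    obs = env.reset()
    total, done = 0.0, False
    while not done:
        obs, reward, done, info = env.step(policy(obs))
        total += reward
    return total, info["turn"]
```

A policy here is just a function from the rendered text to an action index, so `run_episode(ToyDungeonEnv(), lambda obs: 1)` plays an agent that always walks east. The real environment is vastly richer, but the agent-facing loop has the same shape.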

NetHack’s extremely large number of possible states and its environment dynamics present interesting challenges for exploration methods. To master the game, even human players often need to consult external resources to identify critical strategies or discover new paths forward. That depth makes it all the more challenging for an agent to acquire the required game skills, such as collecting resources, fighting monsters, eating, manipulating objects, casting spells and taking care of pets.

The team says NLE makes it easy for researchers to probe the behaviour of their agents by defining new tasks with just a few lines of code. To demonstrate NetHack as a suitable testbed for advancing RL, the team released a set of initial tasks in the environment that include navigating to a staircase while being accompanied by a pet, locating and consuming edibles, collecting gold, scouting to discover unseen parts of the dungeon, and finding the Oracle.
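As a rough illustration of how a task can boil down to a few lines, the sketch below treats a task as nothing more than a reward rule and a stopping rule over summaries of the game state. Everything in it is an assumption made for the example: the `Task` class, the `State` dictionary with its `gold` and `on_stairs` keys, and the `score` helper are hypothetical and do not reflect how NLE actually defines its tasks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical summary of the game state, e.g. {"gold": 3, "on_stairs": 0}.
State = Dict[str, int]


@dataclass
class Task:
    name: str
    reward: Callable[[State, State], float]  # (previous, current) -> reward
    done: Callable[[State], bool]            # current -> episode finished?


# Reward the amount of gold picked up on each turn; never ends the episode.
gold_task = Task(
    name="gold",
    reward=lambda prev, cur: float(cur["gold"] - prev["gold"]),
    done=lambda cur: False,
)

# Reward reaching the staircase once, then end the episode.
staircase_task = Task(
    name="staircase",
    reward=lambda prev, cur: 1.0 if cur["on_stairs"] else 0.0,
    done=lambda cur: bool(cur["on_stairs"]),
)


def score(task: Task, trajectory: List[State]) -> float:
    """Sum a task's reward over consecutive states, stopping when it is done."""
    total = 0.0
    for prev, cur in zip(trajectory, trajectory[1:]):
        total += task.reward(prev, cur)
        if task.done(cur):
            break
    return total
```

The same recorded trajectory can then be scored under different tasks, which is the kind of probing the researchers describe: the environment stays fixed and only the definition of success changes.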

They also introduced baseline models trained using IMPALA and Random Network Distillation, a popular exploration bonus that enables agents to learn diverse policies for the early stages of NetHack, and performed a qualitative analysis of their trained agents to demonstrate the benefit of NetHack’s symbolic observation space.
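The idea behind Random Network Distillation can be shown at toy scale. A fixed, randomly initialized target network maps observations to features, a second predictor network is trained to match it, and the prediction error serves as the exploration bonus: it shrinks for observations the agent has seen often and stays high for novel ones. The sketch below uses tiny linear maps in plain Python rather than the deep networks of the actual baselines, and the `RNDBonus` class, its parameters and the one-hot observations are all assumptions made for illustration.

```python
import random


def make_linear(in_dim, out_dim, rng):
    """Random weight matrix with out_dim rows and in_dim columns."""
    return [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(out_dim)]


def apply_linear(weights, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


class RNDBonus:
    """Toy linear version of the Random Network Distillation bonus."""

    def __init__(self, obs_dim, feat_dim=4, lr=0.05, seed=0):
        rng = random.Random(seed)
        self.target = make_linear(obs_dim, feat_dim, rng)     # fixed, never trained
        self.predictor = make_linear(obs_dim, feat_dim, rng)  # trained to imitate target
        self.lr = lr

    def bonus(self, obs):
        # Squared prediction error: large for unfamiliar observations.
        t = apply_linear(self.target, obs)
        p = apply_linear(self.predictor, obs)
        return sum((pi - ti) ** 2 for pi, ti in zip(p, t))

    def update(self, obs):
        # One gradient step on the squared error, applied to the predictor only.
        t = apply_linear(self.target, obs)
        p = apply_linear(self.predictor, obs)
        for row, pi, ti in zip(self.predictor, p, t):
            for j, xj in enumerate(obs):
                row[j] -= self.lr * 2 * (pi - ti) * xj
```

Calling `update` repeatedly on one observation drives its bonus toward zero, while an observation the predictor has never been trained on keeps its original bonus, so an agent rewarded with this signal is nudged toward parts of the dungeon it has not yet visited.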

NLE is currently built on NetHack 3.6.6, and the researchers plan to base future versions on NetHack 3.7 and beyond. The 3.7 version, once released, will add a new “Themed Rooms” feature to increase the variability of observations. It will also introduce scripting in the Lua language, which will enable users to create custom sandbox tasks.