Source – sciencemag.org

You may know the art of the deal, but there’s a science to it, too. And artificial intelligence is beginning to learn it. Computers that could negotiate for us could automate and optimize everything from traffic intersections to global treaties. Last month, at the International Joint Conference on Artificial Intelligence (IJCAI) in Melbourne, Australia, a group of researchers presented a paper on the challenges and opportunities of such hagglebots. Science spoke with the paper’s lead author, Tim Baarslag, a computer scientist at Centrum Wiskunde & Informatica in Amsterdam. This interview has been edited for brevity and clarity.

Q: What can autonomous negotiators do today?

A: There are simple bots on eBay, for example “sniping agents” that bid at the last possible second. And there’s software that bids for ad placement online. Then there are negotiation support systems, software that assists humans by suggesting win-win outcomes. In a job negotiation, the system might suggest: “From the proposals received so far, it can be deduced that the salary level is very important for your boss, while you have previously indicated you value family life most of all. Perhaps asking instead for a work day at home could be in your mutual interest.” And then there are agents in our IJCAI competition that, for example, play the board game Diplomacy with each other: “If you help me invade this country then later I can help you invade that country.” But they can’t strike deals without knowing the user’s preferences. We still lack autonomous negotiators in the full sense of the word.

Q: What could fully autonomous negotiators do?

A: In principle, there are tons of applications. Humans often fail to reach the best agreements where computers can. On top of that, computer negotiation can be done very fast. Autonomous negotiators could be used in haggling at a marketplace, buying a house, setting a meeting, or solving political gridlock when parties need to negotiate a very complex deal. Think of the Paris climate deal, or simply coalition agreements for the next government.

Q: Why will such negotiation bots be necessary in the future?

A: An application I mention in the paper is the smart grid and community energy trading. You could say to your neighbor, “I’m going on holiday. You can use my solar-powered energy for the next week. Maybe later I could get something back.” These small negotiations would happen sometimes even every 15 minutes. Another application is the Internet of Things, a scenario in which our everyday objects will all be interconnected through the internet. Maybe some users would like to pay a little bit more to get more privacy or increased functionality. We ran an experiment in which smartphone users were allowed to haggle over apps’ access to their location, contacts, and photos in exchange for money. The ability to negotiate led to decisions more in line with their privacy preferences.

Q: What challenges do these bots face?

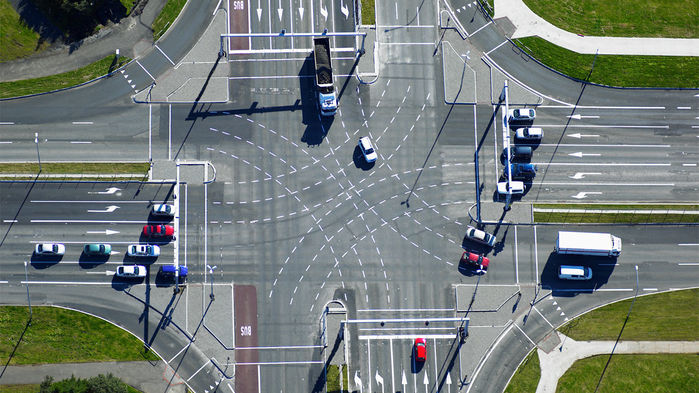

A: Apart from eliciting a user’s preferences on the fly, an agent has to understand the domain. In a job negotiation, you have to feed it information on what you can ask. If you do a house negotiation, you would have to engage with someone who knows about real estate: house prices, maybe even the psychology involved. Another challenge is long-term perspective, doing multiple negotiations with the same or different opponents. Think of autonomous vehicles constantly having to engage in quick negotiations about who gets priority. What agents will then need is some kind of reputation metric. Another real-life challenge is inappropriate bias. For example, in 2000, Amazon experimented with price discrimination, meaning that different customers buying the very same item paid a different price. If you have different prices for different genders, races, and incomes, you might have a problem.

Q: How do we empower these bots while still trusting them?

A: This is a difficult point. You run the risk of opaque behavior, where the agent is just running around doing its own thing. We think it’s very important to have transparency about likely outcomes. Take Uber. In the app, even before you call, you will see a range of prices. Actually, what goes on behind the screen is a little negotiation with all the drivers, where the advertised price range assures the user of the likely outcome.

Q: Should we expect people to behave differently when represented by bots?

A: Human lawyers are sometimes actually ethically permitted or even expected by their clients to misrepresent what has happened. And in autonomous driving, people want cars to have certain ethical behaviors, but when it concerns their own car they easily sacrifice these ideals. We need to study much more carefully what this will lead to in the future.