Source: analyticsindiamag.com

Reinforcement learning has exceeded human-level performance at playing games. As a testbed, games offer rich and challenging domains for evaluating reinforcement learning algorithms, and a collection of games paired with well-known reinforcement learning implementations is a common starting point.

Reinforcement learning is useful when we need an agent to perform a specific task but there is no single “correct” way of accomplishing it. In a paper, researcher Kevin Chen showed that deep reinforcement learning is very efficient at learning how to play the game Flappy Bird, despite the high-dimensional sensory input.

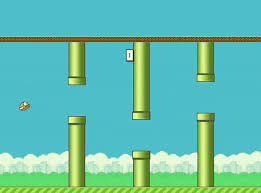

According to the researcher, the goal of the project is to learn a policy that lets an agent play Flappy Bird successfully. Flappy Bird is a popular mobile game in which the player tries to keep the bird alive for as long as possible while it flaps and navigates through the pipes. The bird automatically falls towards the ground due to gravity, and if it hits the ground, it dies and the game ends.

To score high, the player must keep the bird alive for as long as possible while navigating through the obstacles (the pipes). Training an agent to play the game successfully is especially challenging because the agent is given only pixel information and the score.

AI Playing Flappy Bird

The researcher did not give the agent any information about what the bird or the pipes look like. The agent must learn these representations itself and use the raw input and the score directly to develop an optimal strategy.

The goal of reinforcement learning is always to maximise the expected value of the total payoff or the expected return. In this research, the agent used a Convolutional Neural Network (CNN) to evaluate the Q-function for a variant of Q-learning.
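To make "expected return" and the Q-learning target concrete, here is a minimal sketch in plain Python. The function names, the discount factor `gamma` and the example rewards are assumptions for illustration only; the article does not give the values used in the research.

```python
def discounted_return(rewards, gamma=0.95):
    """Total payoff of one episode, discounting each later reward by gamma.

    gamma=0.95 is an illustrative assumption, not a value from the article.
    """
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def q_learning_target(reward, next_q_values, done, gamma=0.95):
    """One-step Q-learning target: r + gamma * max over a' of Q(s', a').

    next_q_values holds the current Q estimates for every action in the
    next state. If the episode ended (the bird died), there is no future.
    """
    if done:
        return reward
    return reward + gamma * max(next_q_values)


if __name__ == "__main__":
    # Hypothetical episode: small reward for surviving, penalty on crashing.
    print(discounted_return([0.1, 0.1, 1.0, -1.0]))
    print(q_learning_target(0.1, [0.3, 0.7], done=False))
```

Q-learning repeatedly nudges its estimate of Q(s, a) towards this target. In deep Q-learning the estimate comes from a neural network rather than a lookup table.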

The approach used here is deep Q-learning, in which a neural network approximates the Q-function. As mentioned, this network is a convolutional neural network, and it is also referred to as the Deep Q-Network (DQN).

According to the researcher, an issue that arises in traditional Q-learning is that experiences from consecutive frames of the same episode (a run from start to finish of a single game) are highly correlated. This hinders the training process and makes it inefficient. To mitigate the issue and de-correlate the experiences, the researcher used experience replay, storing the experience from every frame in a replay memory. Training samples can then be drawn at random from this memory rather than in frame order.
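The idea behind experience replay can be sketched with a small fixed-size buffer. This is only an illustration under stated assumptions: the class name `ReplayMemory`, its methods, the capacity and the tuple layout are hypothetical and are not taken from the researcher's code.

```python
import random
from collections import deque


class ReplayMemory:
    """Fixed-size store of past experiences (hypothetical helper)."""

    def __init__(self, capacity):
        # A deque with maxlen silently drops the oldest entry when full.
        self.buffer = deque(maxlen=capacity)

    def store(self, state, action, reward, next_state, done):
        """Save the experience observed at one frame."""
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Draw a random batch, breaking up runs of consecutive frames."""
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


if __name__ == "__main__":
    memory = ReplayMemory(capacity=1000)  # capacity is an assumed value
    for frame in range(50):
        # Placeholder states; a real agent would store image frames here.
        memory.store(frame, frame % 2, 0.1, frame + 1, False)
    batch = memory.sample(8)
    print([experience[0] for experience in batch])
```

Because the batch is sampled uniformly from the whole memory, neighbouring frames of one game rarely end up in the same update, which is what reduces the correlation described above.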

Behind Deep Q-Network

The Q-function in this approach is approximated by a convolutional neural network that takes an 84×84×historyLength image as input and has one output for every possible action.

The first layer is a convolution layer with 32 filters of size 8×8 and stride 4, followed by a rectified linear unit (ReLU). The second layer is also a convolution layer, with 64 filters of size 4×4 and stride 2, followed by another rectified linear unit. The third convolution layer has 64 filters of size 3×3 and stride 1, again followed by a rectified linear unit. After these layers comes a fully connected layer with 512 outputs, and then a fully connected output layer with a single output for each action.
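As a quick check on these layer sizes, the short sketch below traces how the spatial size of an 84×84 input shrinks through the three convolution layers. It assumes no padding, which the article does not state, so the resulting numbers should be read as illustrative; the helper names are hypothetical.

```python
def conv_output_size(input_size, kernel_size, stride):
    """Spatial output size of a convolution, assuming no padding."""
    return (input_size - kernel_size) // stride + 1


# (filters, kernel size, stride) for each layer, as described in the text.
CONV_LAYERS = [(32, 8, 4), (64, 4, 2), (64, 3, 1)]


def trace_shapes(input_size=84):
    """Return the (height, width, channels) shape after each conv layer."""
    size = input_size
    shapes = []
    for filters, kernel_size, stride in CONV_LAYERS:
        size = conv_output_size(size, kernel_size, stride)
        shapes.append((size, size, filters))
    return shapes


if __name__ == "__main__":
    shapes = trace_shapes()
    for index, shape in enumerate(shapes, start=1):
        print(f"conv{index}: {shape}")
    height, width, channels = shapes[-1]
    # Number of values fed into the 512-unit fully connected layer.
    print("flattened:", height * width * channels)
```

Under the no-padding assumption the feature maps go from 84×84 to 20×20, 9×9 and finally 7×7, so the fully connected layer would receive 7 × 7 × 64 = 3136 values.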

Wrapping Up

The metric for evaluating the performance of the DQN is the game score, i.e. the number of pipes passed by the bird. According to the researcher, the trained Deep Q-Network played extremely well and even performed better than humans. On the easy and medium difficulties, the scores for both the human player and the DQN are infinite. The DQN still comes out ahead of a human player because it does not have to take a break and can play for 10+ hours at a stretch.