Source – nextplatform.com

One of the most common misconceptions about machine learning is that success is solely due to its dynamic algorithms. In reality, the learning potential of those algorithms and their models is driven by data preparation, staging and delivery. When suitably fed, machine learning algorithms work wonders. Their success, however, is ultimately rooted in the data logistics.

Data logistics are integral to how sufficient training data is accessed. They determine how easily new models are deployed. They specify how changes in data content can be isolated to compare models. And they facilitate the effective use of multiple models within a specific use case.

Effective organizations are able to overcome multiple obstacles to successful data preparation, minimizing prep time while reusing that data across models and applications. The data landscape has become increasingly distributed, making it difficult to pool resources for any purpose. With data scattered about on premises, in the cloud (both public and private), and at the edge in Internet of Things applications, these processes often become fragmented, further complicating analytics.

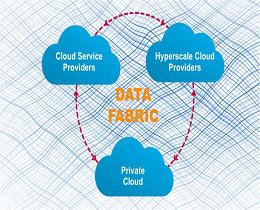

The solution to distributed data sources and the inordinate time devoted to less-than-effective machine learning processes is deploying a global data fabric. By extending a data fabric to manage, secure and distribute data – within and outside the enterprise – organizations have controlled access to a sustainable solution enabling pivotal machine learning advantages.

A data fabric allows organizations to handle the ever-increasing volume of data while still meeting SLAs, which become much harder to honor at scale. For example, at scale it is difficult to support the velocity and agility required for most low-latency applications supported by machine learning. Similarly, reliability is extremely important as these applications move from historical, descriptive analytics to deeply integrated parts of mission-critical applications. And finally, while a uniform fabric simplifies the management and control of diverse data sets, it can make it more difficult for users and applications to quickly access the required data. A fabric that encompasses global messaging with an integrated publish and subscribe framework supports real-time delivery of and access to data, enabling real-time intelligent operations at scale.

There are four key requirements for implementing an end-to-end fabric that streamlines the logistics necessary for organizations to reap the rewards of machine learning innovations. These include extending the fabric to multiple locations, optimizing data science, accounting for data in motion, and centralizing data governance and security.

Number 1: Multiple Locations, All Data

An enterprise data fabric connects all data across multiple locations at all times. It delivers a uniformity of access to the actual data for operations and analytics regardless of the amount of distributed data.

That said, there are several competing definitions of the term data fabric that can cause confusion. ETL vendors offer integration and data federation tools that define the data flows from sources and destinations as “fabrics.” Storage vendors market “fabrics” that extend traditional storage networks. Virtualization vendors extol data fabric solutions, but their abstraction layers simply conceal the complexity of access without addressing the underlying issues to simplify and speed access at scale.

A true global fabric simplifies all data management aspects across the enterprise. These solutions optimize machine learning logistics by simplifying data management, access, and analysis regardless of whether data is on premises, in the cloud or at the edge.

Workflows and their data are easily moved from on premises to the cloud for performance, cost or compliance reasons. Resources can seamlessly shift between the cloud and on premises because they’re part of the same architecture. The fabric simply expands to all locations including the edge.

The overarching fabric’s agility, in conjunction with its ability to scale between multiple locations at modest prices, optimizes data flow within the enterprise. The result is the distribution of data processing and intelligence for real-time responses in decentralized settings. For example, oil companies can implement deep learning predictive maintenance on drilling equipment to eliminate downtime and maximize production. Which brings us to our second requirement.

Number 2: Integrating Analytics

A data fabric needs to support machine learning across locations and support agility and rapid deployment. A global data fabric’s primary objective is to maximize the speed and agility of data-driven processes, which is most noticeable in the acceleration of the impact of data science. Data fabrics achieve this advantage in two ways: by equipping data scientists with the means of improving their productivity, and by integrating data science within enterprise processes.

Typically, data scientists dedicate up to 80 percent of their time to data acquisition and data engineering, the logistics of machine learning. By simplifying data operations, data fabrics multiply the productivity of scarce data scientists five- to ten-fold.

A critical best practice for machine learning logistics is to create a stream of noteworthy event data for specific business cases. These data sets become influential for building machine learning models, which require curating data for testing, sampling, exposing, recalibrating, and deploying those models.

These data streams support the flexible building and deploying of machine learning models. Users can separate the stream into topics that are most relevant to applications and their business objectives. Because data streams are persistent, a new model can subscribe from the beginning of an event stream, and data scientists can compare its results with those of the original model on exactly the same event data. This capability is critical for fine-tuning new models to optimize business results, and it lets scientists test models without disrupting production. Models are easily incorporated into production without downtime; one simply adds a new subscriber to the stream.
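The replay pattern described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not any vendor's API: the `EventStream` class, the `pump-sensors` topic, the sensor readings, and both threshold "models" are hypothetical names and values invented for the example.

```python
from collections import defaultdict


class EventStream:
    """Toy in-memory stand-in for a persistent, topic-based event stream."""

    def __init__(self):
        self._topics = defaultdict(list)  # topic -> append-only list of events
        self._offsets = {}                # (topic, subscriber) -> next index to read

    def publish(self, topic, event):
        self._topics[topic].append(event)

    def subscribe(self, topic, subscriber, from_beginning=True):
        # Events are retained, so a late subscriber can still start at offset 0.
        start = 0 if from_beginning else len(self._topics[topic])
        self._offsets[(topic, subscriber)] = start

    def poll(self, topic, subscriber):
        """Return the events this subscriber has not read yet and advance its offset."""
        start = self._offsets[(topic, subscriber)]
        events = self._topics[topic][start:]
        self._offsets[(topic, subscriber)] = len(self._topics[topic])
        return events


# Two hypothetical models: simple threshold rules standing in for trained models.
def model_v1(event):
    return event["vibration"] > 0.8


def model_v2(event):
    return event["vibration"] > 0.6 and event["temperature"] > 90


stream = EventStream()
stream.subscribe("pump-sensors", "model_v1")

# Made-up sensor readings published while only model_v1 is in production.
for reading in [
    {"vibration": 0.9, "temperature": 95},
    {"vibration": 0.7, "temperature": 92},
    {"vibration": 0.5, "temperature": 99},
]:
    stream.publish("pump-sensors", reading)

v1_results = [model_v1(e) for e in stream.poll("pump-sensors", "model_v1")]

# The new model is added later as one more subscriber and replays the same events.
stream.subscribe("pump-sensors", "model_v2", from_beginning=True)
v2_results = [model_v2(e) for e in stream.poll("pump-sensors", "model_v2")]

# Side-by-side comparison on identical input.
comparison = list(zip(v1_results, v2_results))
```

Because each subscriber keeps its own read offset, adding `model_v2` does not affect what `model_v1` has consumed, which is the property that lets a new model be evaluated without touching production.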

Data scientists can test new models while using the output of their initial model, and then switch to the output of a new model, aggregate results, or even split traffic between them if desired. The efficiency gain over the conventional approach, in which scientists have to look for representative data samples, disrupt production to do so, test them, and so forth, is dramatic, as is the overall gain in speed.
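One common way to split traffic between an original and a new model is to hash a stable event identifier into a bucket, so that the same event is always routed to the same model. The sketch below assumes each event carries an `id` field; the function names, the `new_model_share` parameter, and the 10,000-bucket granularity are illustrative choices, not something the article prescribes.

```python
import hashlib

BUCKETS = 10_000  # routing granularity: shares are resolved to 0.01 percent


def route(event_id, new_model_share):
    """Deterministically assign an event to the 'new' or 'original' model."""
    digest = hashlib.sha256(event_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS
    return "new" if bucket < new_model_share * BUCKETS else "original"


def score(event, original_model, new_model, new_model_share=0.1):
    """Send the event to whichever model the router picks and record the choice."""
    chosen = route(event["id"], new_model_share)
    model = new_model if chosen == "new" else original_model
    return {"id": event["id"], "served_by": chosen, "prediction": model(event)}
```

Raising `new_model_share` from 0.0 toward 1.0 shifts traffic gradually to the new model, and recording `served_by` with each prediction keeps the two result sets separable for later aggregation or comparison.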

One of the vital characteristics of global data fabrics is that the same data supports an array of use cases, including the optimization of machine learning logistics. Self-describing formats such as JSON, Parquet, and Avro enable schema on read, which provides the flexibility required to support multiple use cases. This is in stark contrast to relational settings, in which new data sources or changing business requirements force constant schema changes.
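As a rough illustration of schema on read, the Python sketch below stores events as raw JSON lines and lets each consumer project only the fields it cares about when it reads. The records, field names, and the `read_with_schema` helper are made up for the example; a field that a record lacks is simply returned as `None` rather than requiring a schema migration.

```python
import json

# Raw events as they were written; the second record carries an extra field.
raw_events = [
    '{"device": "pump-7", "vibration": 0.91}',
    '{"device": "pump-7", "vibration": 0.42, "temperature": 88.5}',
]


def read_with_schema(lines, fields):
    """Apply a schema at read time: keep the requested fields, default missing ones to None."""
    for line in lines:
        record = json.loads(line)
        yield {name: record.get(name) for name in fields}


# Two use cases read the same stored data with different schemas.
maintenance_view = list(read_with_schema(raw_events, ["device", "vibration"]))
thermal_view = list(read_with_schema(raw_events, ["device", "temperature"]))
```

The stored data never changes; adding a third use case means writing a new field list, not altering what was already written.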

This flexibility, speed and agility are key to successfully integrating analytics into operations, which is the foundation of next-generation applications that increase revenue, improve efficiency and strengthen the ability to manage risk.

Number 3: Data In Motion

Many edge computing applications or social media analytics can’t wait until data are in repositories. A data fabric must embrace data in motion, and support access and analysis for data in motion and data at rest.

Event streams that can handle large scale data for long periods of time drive efficiency. Incorporating event streams and the ability to process all data into a single fabric allows the enterprise to apply the same rigor to data regardless of location, state, or application.

Self-describing data formats are swiftly becoming the de facto means of data interchange for any number of real-time use cases, from connected cars to wearable devices. Although global fabrics support traditional modeling conventions as well, the quick response times and flexibility of schema-on-demand options make them more useful for most applications, particularly those that need high performance and must deal with changing data sets.

Many operational use cases across industries require analyzing data in motion and automating responses. In financial services, fraud detection and security use cases require diverse data feeds, including video. Increasingly, data in transit must be rapidly analyzed at the edge to detect and prevent suspicious behavior.

By equipping organizations to manage data at rest and in motion in the same manner, global fabrics meet the speed requirements of digital transformation, delivering low-latency analytics and responses in real time.

An inclusive data fabric strengthens these use cases and others because data are part of the same tapestry at all times: security, governance, and their administration remain in place whether data is in transit or not.

Number 4: Central Governance And Security

Fabrics improve governance and security by making their administration easier while strengthening data protection. Because data are part of a single architecture, there are fewer gaps to fortify.

One important element is access control. When access needs to be limited by a combination of department, function, security level, and so on, the number of access control lists multiplies to such an extent that the lists become hard to manage and actually less secure. One interesting development is the creation of Access Control Expressions that combine Boolean logic with access control lists. For example, you could create one simple expression to limit access to Marketing Directors and above who hold a security clearance of at least level 2.
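The idea of expressing that rule as a single Boolean expression, rather than as many separate lists, can be sketched in Python. This is only an illustration of the concept and does not reproduce the syntax of any real Access Control Expression implementation; the `User` fields, the role ranking in `ROLE_RANK`, and the helper names are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical seniority ordering used to evaluate "Director and above".
ROLE_RANK = {"Analyst": 1, "Manager": 2, "Director": 3, "Vice President": 4}


@dataclass(frozen=True)
class User:
    name: str
    department: str
    role: str
    clearance: int


# Each helper returns a predicate: a function from User to bool.
def department_is(name):
    return lambda user: user.department == name


def role_at_least(role):
    return lambda user: ROLE_RANK[user.role] >= ROLE_RANK[role]


def clearance_at_least(level):
    return lambda user: user.clearance >= level


def all_of(*conditions):
    """Boolean AND over predicates."""
    return lambda user: all(cond(user) for cond in conditions)


# One expression: Marketing AND Director-or-above AND clearance >= 2.
can_read = all_of(
    department_is("Marketing"),
    role_at_least("Director"),
    clearance_at_least(2),
)
```

A single composed predicate replaces what would otherwise be one list per combination of department, role, and clearance level, which is the management burden the paragraph above describes.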

Governance and security extend across the fabric to the edge, and the same access controls are enforced regardless of where the data travels. They apply equally whether clusters expand from 100 to 1,000 nodes or more, with encryption for data both in motion and at rest.

Centralizing governance and security measures in comprehensive data fabrics effectively expands them alongside the fabric. The uniformity of administration simplifies this process while enhancing data management efficiency.

Machine Learning Success

To successfully harness AI and machine learning, organizations need to focus on data logistics and the four requirements for a data fabric:

- Deploy a data fabric that can stretch across all locations and embrace all the data needed to process and analyze

- Integrate analytics directly into operations through a data fabric

- Support data in motion and data at rest

- Include centralized governance and security