Source – electronicdesign.com

Almost two years ago, Google disclosed that it had built a slab of custom silicon called the tensor processing unit to improve its Street View software’s reading of street signs, the accuracy of its search engine algorithm, and the machine learning methods that it uses in dozens of other internet services.

But the company never planned to keep its custom accelerator on the backend indefinitely. The goal had always been to hand the keys of the tensor processing unit – more commonly called the TPU – to software engineers via the cloud. On Monday, Google finally started offering its TPU chips to other companies, putting the custom silicon in more hands and its performance in front of more eyes.

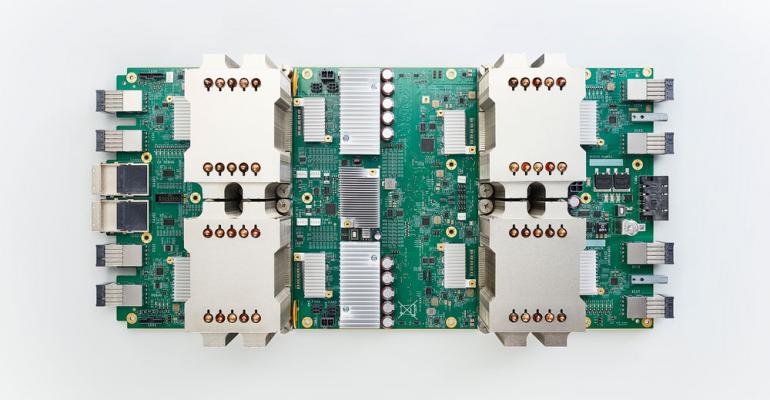

Each Cloud TPU is equipped with four custom chips connected together to provide 180 trillion floating-point operations per second for machine learning workloads. With that much computing power, software engineers can train neural networks in hours rather than days, without having to build a private computing cluster, according to Google.

“Traditionally, writing programs for custom ASICs and supercomputers has required deeply specialized expertise,” wrote John Barrus, Google Cloud’s product manager for Cloud TPUs, and Zak Stone, product manager for TensorFlow and Cloud TPUs inside the Google Brain team, in a blog post.

To lower the bar for programming, Google is offering a set of software tools based on TensorFlow, a software framework for machine learning developed by Google. The company said it would make models available for object detection, image classification and language translation as a reference for customers. Google said that it would charge $6.50 per TPU per hour for those participating in the beta.
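To put the beta price in perspective, here is a minimal Python sketch of what a training run would cost at $6.50 per TPU per hour. The function name and the 12-hour job are hypothetical, and the sketch assumes simple linear billing; Google's actual billing granularity is not described here.

```python
# Beta price quoted in the article, in US dollars.
BETA_PRICE_PER_TPU_HOUR = 6.50

def training_cost(hours: float, tpus: int = 1) -> float:
    """Estimate the cost in USD of renting `tpus` Cloud TPUs for `hours` hours.

    Assumes cost scales linearly with time and TPU count (an assumption,
    not a documented billing rule).
    """
    return BETA_PRICE_PER_TPU_HOUR * tpus * hours

# Hypothetical job: 12 hours on a single TPU.
print(f"${training_cost(12):.2f}")  # $78.00
```

At that rate, a job that finishes in hours rather than days stays in the tens of dollars per TPU.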

Google’s goal is to bait software engineers into using its cloud computing platform over Amazon’s and Microsoft’s clouds. But in the process, Google is starting to compete with other chip suppliers, particularly Nvidia, which is spending billions of dollars on chips festooned with massive amounts of memory and faster interconnects to hold its lead in the market for machine learning.

Moving its custom chips into the cloud also puts Google on a collision course with semiconductor startups targeting machine learning, including Groq, founded by former Google engineers who worked on the first TPU. Other companies close to releasing chips, like Graphcore and Wave Computing, could be hurt by Google’s bid for vertical integration, especially if other internet giants follow its lead.

Google said that the first customers to use the custom hardware include Lyft, which is working on autonomous vehicles that can be deployed in its ride-sharing network, and which raised $1 billion in a financing round last year led by Google’s parent Alphabet. Another early user is Two Sigma, an investment management firm, Barrus and Stone wrote in the blog post.

There are a few questions we do not have answers to yet. It is not clear how much of Google’s internal training and inferencing runs on its custom chips, which since last year support both phases of machine learning. And Google is still facing the question of whether it can convince cloud customers to stop using Nvidia’s graphics processors for training and Intel’s central processing units for inferencing.

Last year, Google pulled the curtain off the performance of its first-generation chip, which was optimized only for the inferencing phase of machine learning. It claimed that the accelerator ran thirteen times faster than chips based on Nvidia’s Kepler architecture. We point that out because Kepler is now two full generations behind Nvidia’s Volta architecture, which is built around custom cores that thrive on the intensive number-crunching involved in machine learning.

Nvidia estimates that it spent $3 billion building Volta. Its latest line of chips based on the architecture can deliver 125 trillion floating-point operations per second for training and running neural networks. The chips can be packaged into miniature supercomputers that can be slid into a standard server or taken to oil rigs or construction sites to train algorithms locally instead of in a public cloud.

Google is not going to stop buying the latest chips for its cloud. The company said it would bolster its cloud with Nvidia’s Tesla V100 chips, which are actually more flexible than Google’s chips because they support a wider range of software libraries, including TensorFlow. It will also offer customers chips based on Intel’s Skylake architecture, which has been beefed up for machine learning.

Later this year, Google plans to hand customers the keys to supercomputers called TPU pods, each of which links together 64 of the four-chip TPUs to provide up to 11.5 petaflops of performance. In December, Google said that a single pod could train ResNet-50, an image classification network used as a benchmark for machine learning chips, in less than half an hour, much faster than previous methods.
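The pod figure lines up with the single-TPU number quoted earlier if a pod is assumed to combine 64 of the four-chip, 180-teraflop TPUs. A quick Python sketch of that arithmetic follows; the constant and function names are illustrative, and the per-chip figure is simply the quoted total divided evenly by four.

```python
# Figures quoted in the article; names are illustrative only.
TFLOPS_PER_TPU = 180   # one four-chip Cloud TPU, trillions of operations per second
CHIPS_PER_TPU = 4
TPUS_PER_POD = 64      # assumption: a pod links 64 four-chip TPUs

def per_chip_tflops() -> float:
    """Throughput of one chip, assuming the four chips contribute equally."""
    return TFLOPS_PER_TPU / CHIPS_PER_TPU

def pod_petaflops() -> float:
    """Peak pod throughput in petaflops (1 petaflop = 1,000 teraflops)."""
    return TFLOPS_PER_TPU * TPUS_PER_POD / 1000

print(per_chip_tflops())  # 45.0
print(pod_petaflops())    # 11.52
```

These are peak numbers multiplied out on paper. They say nothing about how well real training jobs scale across a pod.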