Source: nojitter.com

For all the time we spend trying to get good outcomes from a tech project, is it possible that we’re not entirely clear about what “good outcomes” are? Why do so many cloud applications fail to meet their business case? Why do so many network standards fail to change the network? If we completely understood what the right outcome was, why wouldn’t every project achieve it? The core of the problem, I think, lies in the combination of complexity and visualization.

My first programming job involved several application assignments. I think my second one was to write a program to edit the data on insurance applications before it went to the update process. The senior programmer was writing the update piece. And since the insurance was a group policy for dentists, he understandably assumed that the “dentist” applicant would be at least 18 years old, then wrote that assumption into the program. I didn’t edit for that, and so an error in data entry gave us an exceptionally youthful dentist, which crashed the update program. I fixed the edit, and there was no more crash.
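
As a rough illustration of that kind of edit step, here is a minimal Python sketch. The record layout, the function name, and the blank-name check are assumptions made for this example; only the minimum age of 18 comes from the story, and nothing here reflects the original program.

```python
from dataclasses import dataclass

MIN_APPLICANT_AGE = 18  # the assumption the update program relied on


@dataclass
class Application:
    # Hypothetical record layout; the real application fields are not known.
    applicant_name: str
    age: int


def edit_application(app: Application) -> list[str]:
    """Return a list of edit errors; an empty list means the record may go to update."""
    errors = []
    if not app.applicant_name.strip():
        errors.append("applicant name is blank")
    if app.age < MIN_APPLICANT_AGE:
        errors.append(f"age {app.age} is below the minimum of {MIN_APPLICANT_AGE}")
    return errors
```

The point of the sketch is that every assumption the downstream program makes has to show up as an explicit check upstream; a record that fails the edit never reaches the update step.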

Years later, I was called in by a major healthcare company when their entire network crashed, taking down half a state’s worth of hospitals and clinics. The problem was that a new network technology (IP routing) had been introduced, and routers can queue data where point-to-point fixed connections can’t. The software was written to assume no long delay in message transport was possible, and when it happened, the endpoints of the protocol got out of sync, and everything went down. The solution was to drop the messages if there was congestion, not queue them.
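
The difference between queuing and dropping can be sketched in a few lines of Python. This is an illustration only: the class name, the `max_pending` limit, and the idea of counting drops are assumptions for the example, and the article does not say how the actual fix was implemented.

```python
from collections import deque


class DropOnCongestionLink:
    """Hypothetical buffer that discards new messages once it is congested."""

    def __init__(self, max_pending: int):
        self.max_pending = max_pending  # assumed congestion threshold
        self.pending = deque()
        self.dropped = 0

    def send(self, message: str) -> bool:
        """Accept the message if there is room; otherwise drop it and report False."""
        if len(self.pending) >= self.max_pending:
            self.dropped += 1
            return False
        self.pending.append(message)
        return True

    def deliver_next(self):
        """Hand over the oldest pending message, or None if nothing is waiting."""
        return self.pending.popleft() if self.pending else None
```

With a small limit, a message is either delivered promptly or not at all, which is the behavior the endpoints in the story were written to expect. An unbounded queue would instead deliver stale messages long after the endpoints had moved on.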

I wonder how many of our failures in IT and networking are related to a lack of foresight. We design networks, write code, and we still don’t address all the possible conditions. When something we didn’t foresee comes along, it falls into whatever bucket is the default choice, and that often breaks everything. We seem to get this sort of thing more often these days, don’t we?

The reason is complexity. IT and networking are getting better at doing more things, and doing them faster, because they’re getting more complex and capable. That complexity growth is threatening to create major problems because as you increase the number of elements in an application or service, as well as the level of interactivity among them, you increase the complexity in one of those awful goes-vertical-to-infinity curves.

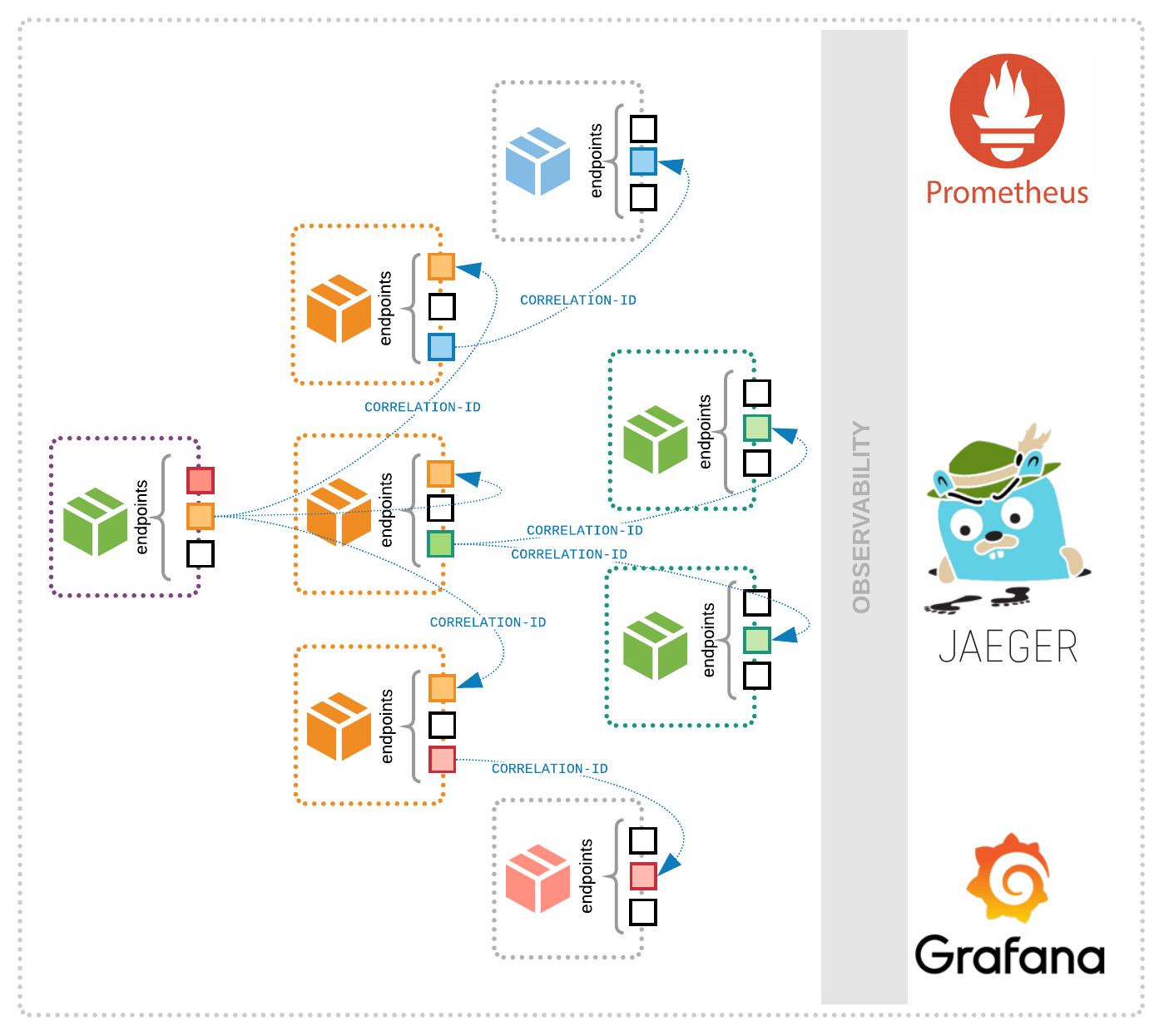

We used to write applications as monoliths and build networks with “core” and “edge.” Now applications are vast floating collections of functionality spinning around in a cloud-hosted mesh, and just getting everything in place or routing work to the right component is as complicated as an entire application used to be. And we’ve not even gotten to the part where work actually gets done, or services deliver profits to providers.

The network operators and the cloud providers have handled this differently. Network operators have generally visualized their applications as humans, and their networks as boxes. Cloud providers have separated functionality from implementation, and I think that there’s a growing gulf between the two approaches. Now, cloud providers want to host network functions for things like 5G, and doesn’t this mean we somehow have to cross that gulf?

Look at a network operations application diagram, and you can almost translate it into a bunch of desks with in- and out-box links and people scurrying around moving paper between boxes. It’s a monolith. They visualize networks in a very similar way, as a collection of devices. The problem is that operators now realize that neither approach works. They’re trying to make network functions virtualization “cloud-native,” and they’re trying to virtualize routing functionality… so they say. All their attempts have ended up creating monoliths, or boxes with different labels on them.

The cloud people aren’t blameless here either. I’ve seen designs for microservice applications that would require five minutes to process a transaction whose quality of experience (QoE) requirements wouldn’t be met if response times were more than five seconds. The total overhead in getting a transaction through all the microservices was greater than the processing time.
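
A back-of-the-envelope check makes the mismatch concrete. In the Python sketch below, the hop count and per-hop timings are invented purely for illustration (the article gives only the five-minute total and the five-second requirement), and the function names are hypothetical.

```python
def end_to_end_seconds(hops: int, overhead_per_hop: float, work_per_hop: float) -> float:
    """Total response time if each microservice hop adds overhead plus real work."""
    return hops * (overhead_per_hop + work_per_hop)


def meets_qoe(total_seconds: float, budget_seconds: float) -> bool:
    """True when the transaction finishes within the QoE budget."""
    return total_seconds <= budget_seconds


# Assumed numbers: 200 hops, 1.0 s of messaging overhead and 0.5 s of work per hop.
HOPS = 200
OVERHEAD_PER_HOP = 1.0
WORK_PER_HOP = 0.5
QOE_BUDGET = 5.0  # seconds, from the article

total = end_to_end_seconds(HOPS, OVERHEAD_PER_HOP, WORK_PER_HOP)
overhead_total = HOPS * OVERHEAD_PER_HOP
work_total = HOPS * WORK_PER_HOP
```

With these assumed inputs the total is 300 seconds, or five minutes, against a five-second budget, and the overhead (200 seconds) exceeds the actual processing (100 seconds). Running this kind of arithmetic at design time is cheap compared with discovering the problem in production.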

The fact is that we think that a good tech approach is identifying a radical new technology. We hear a lot about 5G, but not much realism on what exactly it’s going to do for us. How did we get to a next-generation technology goal without having any specific benefits to drive us there? How do we bridge the gap between operator and cloud, between monolith and microservice, when we don’t seem to understand the basic concept of constraints and benefits?

Boxes and monoliths are comfortable because they’re identities, single things. We’re single things ourselves, so we can intuitively manipulate other things. But functionally divided microservices, distributed behavior that adds up to routing a packet? How the heck do we visualize these things, and if we can’t, how do we know whether we’re using them correctly, or even making them work?

We need to start thinking differently about how functionality is combined to create applications and network services. Somebody might have to teach us. So far, most of the tutorial material out there is down in the muck of implementation detail, not at the conceptual level where all projects either fail or succeed, because up there is where “good” and “bad” end up getting defined.

The cloud works on functions and experiences, not boxes or monoliths, but humans will always think about themselves, and so the cloud has to somehow assemble its array of elastic stuff into something that looks like… us.