Source – phys.org

Physicists have applied machine learning, and in particular the ability of algorithms to learn from experience, to one of the biggest challenges currently facing quantum computing: quantum error correction, which is used to design noise-tolerant quantum computing protocols. In a new study, they have demonstrated that a type of neural network called a Boltzmann machine can be trained to model the errors in a quantum computing protocol and then devise and apply a procedure for correcting them.

The physicists, Giacomo Torlai and Roger G. Melko at the University of Waterloo and the Perimeter Institute for Theoretical Physics, have published a paper on the new machine learning algorithm in a recent issue of Physical Review Letters.

“The idea behind neural decoding is to circumvent the process of constructing a decoding algorithm for a specific code realization (given some approximations on the noise), and let a neural network learn how to perform the recovery directly from raw data, obtained by simple measurements on the code,” Torlai told Phys.org. “With the recent advances in quantum technologies and a wave of quantum devices becoming available in the near term, neural decoders will be able to accommodate the different architectures, as well as different noise sources.”

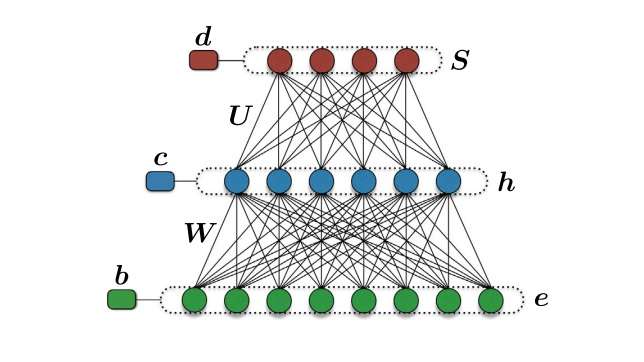

As the researchers explain, a Boltzmann machine is one of the simplest kinds of stochastic artificial neural networks, and it can be used to analyze a wide variety of data. Neural networks typically extract features and patterns from raw data, which in this case is a data set containing the possible errors that can afflict quantum states.
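To make the idea concrete, here is a minimal sketch of a restricted Boltzmann machine, a common simplified variant, written in Python with NumPy. It is not the authors' code: the class name `RBM`, the layer sizes, the learning rate, the contrastive-divergence training rule and the toy data set of independent bit flips at a 10 percent rate are all assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class RBM:
    """Minimal binary restricted Boltzmann machine (illustrative only)."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = np.random.default_rng(seed)
        self.W = 0.01 * self.rng.standard_normal((n_visible, n_hidden))
        self.b = np.zeros(n_visible)  # visible biases
        self.c = np.zeros(n_hidden)   # hidden biases

    def sample_hidden(self, v):
        # Activation probabilities of hidden units, plus a stochastic sample
        p = sigmoid(v @ self.W + self.c)
        return p, (self.rng.random(p.shape) < p).astype(float)

    def sample_visible(self, h):
        # Activation probabilities of visible units, plus a stochastic sample
        p = sigmoid(h @ self.W.T + self.b)
        return p, (self.rng.random(p.shape) < p).astype(float)

    def cd1_step(self, v0, lr=0.5):
        """One contrastive-divergence (CD-1) update on a batch v0."""
        ph0, h0 = self.sample_hidden(v0)
        _, v1 = self.sample_visible(h0)      # one-step reconstruction
        ph1, _ = self.sample_hidden(v1)
        n = v0.shape[0]
        self.W += lr * (v0.T @ ph0 - v1.T @ ph1) / n
        self.b += lr * (v0 - v1).mean(axis=0)
        self.c += lr * (ph0 - ph1).mean(axis=0)

    def sample(self, n_samples, n_gibbs=50):
        """Draw samples by alternating Gibbs sampling from a random start."""
        v = (self.rng.random((n_samples, self.b.size)) < 0.5).astype(float)
        for _ in range(n_gibbs):
            _, h = self.sample_hidden(v)
            _, v = self.sample_visible(h)
        return v

# Toy "error" data (an assumption): each of 8 qubits flips with probability 0.1
data_rng = np.random.default_rng(1)
data = (data_rng.random((2000, 8)) < 0.1).astype(float)

rbm = RBM(n_visible=8, n_hidden=4)
for _ in range(300):
    rbm.cd1_step(data)

samples = rbm.sample(2000)
print("error rate in data:   ", data.mean())
print("error rate in samples:", samples.mean())
```

After training, errors drawn from the network should be roughly as sparse as those in the data, which is the sense in which the machine has learned the distribution of the errors. A real decoder would be trained on errors from an actual code rather than on independent flips.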

Once the new algorithm, which the physicists call a neural decoder, is trained on this data, it can construct an accurate model of the probability distribution of the errors. With this information, the neural decoder can generate candidate error chains, which are then used to recover the correct quantum states.
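The recovery step can be illustrated on a much simpler code than the ones in the study. The Python sketch below uses a seven-bit repetition code against bit flips, and it replaces the trained network with a stand-in sampler that draws candidate chains from a known noise model until one reproduces the measured checks. The function names, the code size and the error rate are assumptions for illustration, not details from the paper.

```python
import numpy as np

def syndrome(error):
    """Parity checks of a bit-flip repetition code: XOR of neighbouring bits."""
    return error[:-1] ^ error[1:]

def sample_consistent_chain(target_syndrome, n_qubits, p, rng, max_tries=10_000):
    """Draw candidate error chains until one reproduces the measured syndrome.

    Stand-in for a trained network: candidates come from a known i.i.d.
    bit-flip model with rate p rather than from a learned distribution.
    Returns None if no consistent chain is found within max_tries.
    """
    for _ in range(max_tries):
        candidate = (rng.random(n_qubits) < p).astype(np.uint8)
        if np.array_equal(syndrome(candidate), target_syndrome):
            return candidate
    return None

rng = np.random.default_rng(0)
n_qubits, p, trials = 7, 0.1, 500   # hypothetical toy parameters

successes = 0
for _ in range(trials):
    true_error = (rng.random(n_qubits) < p).astype(np.uint8)
    measured = syndrome(true_error)             # all the decoder gets to see
    guess = sample_consistent_chain(measured, n_qubits, p, rng)
    if guess is None:
        continue                                # counted as a failure
    residual = true_error ^ guess               # what is left after recovery
    # Same syndrome means the residual is all zeros (success)
    # or all ones (a logical flip, i.e. a failure).
    successes += int(not residual.any())

success_rate = successes / trials
print("fraction of successful recoveries:", success_rate)
```

Because the candidate and the true error trigger the same checks, applying the candidate leaves either no error at all or a flip of every bit, which is the logical error for this code. The variable `success_rate` records how often the first case occurs.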

The researchers tested the neural decoder on quantum topological codes that are commonly used in quantum computing and demonstrated that the algorithm is relatively simple to implement. Another advantage of the new algorithm is that it does not depend on the specific geometry, structure, or dimension of the data, which allows it to be generalized to a wide variety of problems.

In the future, the physicists plan to explore different ways to improve the algorithm’s performance, such as by stacking multiple Boltzmann machines on top of one another to build a network with a deeper structure. The researchers also plan to apply the neural decoder to more complex, realistic codes.

“So far, neural decoders have been tested on simple codes typically used for benchmarks,” Torlai said. “A first direction would be to perform error correction on codes for which an efficient decoder is yet to be found, for instance Low Density Parity Check codes. On the long term I believe neural decoding will play an important role when dealing with larger quantum systems (hundreds of qubits). The ability to compress high-dimensional objects into low-dimensional representations, from which stems the success of machine learning, will allow to faithfully capture the complex distribution relating the errors arising in the system with the measurements outcomes.”