Source: analyticsinsight.net

The Fourth Industrial Revolution (Industry 4.0) has become a systemic challenge for scientific researchers. Industry 4.0 is characterized chiefly by the development and convergence of nano-, bio-, information and cognitive technologies, which drive major transformations in the economic, social, cultural and humanitarian spheres. Whether our country can ride the wave of Industry 4.0 developments depends largely on the specialists who develop and introduce the technologies of the sixth technological paradigm.

For the past 25 years, the authors have been developing the concept of systematic computer simulation training in schools and teacher-training colleges. The main ideas have been summarized and presented in a textbook. Spreadsheets were chosen as the leading environment for computer simulation training, and their application has been examined in a series of articles. Using spreadsheet processors (standalone, integrated and cloud-based) as concrete examples, the authors present elements of a teaching methodology for the computer simulation of deterministic and stochastic objects and processes of various kinds. Systematic simulation training involves moving between and integrating simulation environments, ranging from general-purpose ones (spreadsheets) to specialized, subject-based ones.
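To make the distinction between deterministic and stochastic models concrete, here is a minimal sketch, written in Python rather than in a spreadsheet, of the kind of row-by-row calculation such a course might build by filling a formula down a column. The model choices (compound growth and a random walk of +1/-1 steps), the function names and the spreadsheet formulas quoted in the comments are illustrative assumptions, not material taken from the authors' textbook.

```python
import random
from typing import List


def deterministic_growth(start: float, rate: float, steps: int) -> List[float]:
    """Deterministic model: every row follows exactly from the previous one,
    like a formula such as =A1*(1+$B$1) entered in A2 and filled down a column."""
    rows = [start]
    for _ in range(steps):
        rows.append(rows[-1] * (1 + rate))
    return rows


def random_walk(steps: int, seed: int = 0) -> List[int]:
    """Stochastic model: every row adds a random step of +1 or -1,
    like =A1+IF(RAND()<0.5,-1,1) filled down a column."""
    rng = random.Random(seed)  # seeded so that a run can be reproduced exactly
    rows = [0]
    for _ in range(steps):
        rows.append(rows[-1] + (-1 if rng.random() < 0.5 else 1))
    return rows


def mean_final_position(runs: int, steps: int) -> float:
    """Repeat the stochastic model many times (a Monte Carlo experiment) and
    average the final positions, as one might by recalculating a sheet repeatedly."""
    finals = [random_walk(steps, seed=i)[-1] for i in range(runs)]
    return sum(finals) / runs
```

Calling `deterministic_growth(100.0, 0.1, 3)` returns the same rows every time, while each seed of `random_walk` produces a different path; averaged over many runs with `mean_final_position`, the final position should settle near zero. Seeing both behaviors side by side is the kind of contrast a simulation course can build on before moving to subject-specific tools.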

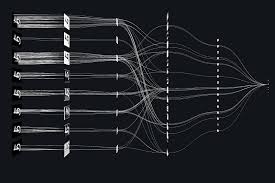

What spreadsheets can’t do is think. That is the domain of newer, more powerful kinds of software called neural networks: complex artificial intelligence programs designed to mimic the computational processes of the human brain. And, for reasons specific to how neural networks have developed in recent years, images, rather than so-called structured data (rows and columns of text and numbers, for instance), have been the preoccupation of top A.I. experts. As a result, powerful computers can sift through huge numbers of photographs of dogs to learn minute canine features. Yet the same software struggles to make sense of the fields in a humble spreadsheet.

This has been deeply frustrating for data scientists in fields like medical research, finance, and operations, where structured data is the coin of the realm. The problem, experts say, is one of focus as well as skills. “Most of the data we deal with is structured, or we have imposed some kind of structure on it,” says Bayan Bruss, a machine learning researcher at Capital One. “There’s this huge gap between the advances in deep learning and the data that we have. A lot of what we do is try to close that gap.”

At biotech powerhouse Genentech, for instance, data scientists recently spent months compiling a spreadsheet with the health records and genomic data of 55,000 cancer patients. The fields contain details such as age, cholesterol levels, and heart rates, as well as more sophisticated attributes like molecular profiles and genetic abnormalities. Genentech’s aim is to feed this data into a neural network that can map each patient’s health attributes. The hoped-for result is a breakthrough drug that could potentially be tailored to each patient.

For all the enthusiasm behind applying deep learning to structured data, obstacles remain. For one, the idea is so new that there is no reliable way to measure how well these techniques perform compared with more conventional statistical methods. “It’s a bit of an open question right now,” says Even Oldridge, a data scientist at Nvidia, which makes chips that power A.I. software.
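One common way to frame such a comparison is to hold out part of the table and score every candidate model on rows it has never seen. The sketch below is a hedged illustration, not anyone's actual benchmark: it scores two conventional baselines (a mean predictor and a one-feature least-squares line) on a hypothetical two-column table, and all function names are assumptions. A deep-learning model would simply be added as one more entry in the `fitters` dictionary and scored the same way.

```python
import random
from statistics import mean
from typing import Callable, Dict, List, Tuple

Row = Tuple[float, float]         # hypothetical table row: one feature x and a target y
Model = Callable[[float], float]  # a fitted model maps x to a predicted y


def holdout_split(rows: List[Row], test_frac: float = 0.25, seed: int = 0):
    """Shuffle the rows and hold out a fraction of them for testing."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_frac))
    return shuffled[:cut], shuffled[cut:]


def fit_mean(train: List[Row]) -> Model:
    """Simplest baseline: always predict the average training target."""
    avg = mean(y for _, y in train)
    return lambda x: avg


def fit_least_squares(train: List[Row]) -> Model:
    """Conventional statistical baseline: ordinary least-squares line y = a + b*x."""
    mx = mean(x for x, _ in train)
    my = mean(y for _, y in train)
    sxy = sum((x - mx) * (y - my) for x, y in train)
    sxx = sum((x - mx) ** 2 for x, _ in train)
    slope = sxy / sxx
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def mse(model: Model, rows: List[Row]) -> float:
    """Mean squared error of a model on a set of rows."""
    return mean((model(x) - y) ** 2 for x, y in rows)


def compare(rows: List[Row],
            fitters: Dict[str, Callable[[List[Row]], Model]]) -> Dict[str, float]:
    """Fit every candidate on the same training rows; score each on the same held-out rows."""
    train, test = holdout_split(rows)
    return {name: mse(fit(train), test) for name, fit in fitters.items()}
```

For example, on synthetic rows generated as `y = 2*x + 1` plus Gaussian noise, `compare(data, {"mean": fit_mean, "least_squares": fit_least_squares})` should give the least-squares line a much lower error than the mean predictor. The open question is whether a neural network, scored this way on real structured data, beats such baselines by a wide enough margin.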

Indeed, given the cost of training neural networks, older data analytics methods may be sufficient for organizations that lack the right A.I. expertise in-house. “I’m a firm believer that, for any organization, there isn’t a magic solution that can solve every problem,” says Kanioura, the PepsiCo executive and A.I. expert. This is in fact the logic behind the pitch that cloud-services giants Amazon, Microsoft, and Google make: buy A.I. services from us instead of spending heavily on talent for possibly incremental returns.

And, as with any project in which people try to teach computers how to “think,” the biases of the humans involved threaten to undermine it. Deep learning systems are only as good as the data they are trained on, and too much or too little of a particular kind of data point can skew the software’s predictions. Genentech’s data set, for example, contains clinical information on cancer patients going back 15 years. However, the genomic testing data used in its spreadsheet goes back only eight years, which means that patient data from before then is not as comparable as the scientists would like. “If we don’t understand these data sets, we could build models that are completely inconsistent,” says Genentech’s Copping.

Still, the potential payoff from supercharging the analysis of all those spreadsheet fields is nothing less than being able to “anticipate how long a patient can survive” with a particular treatment, says Copping. Not bad for a bunch of rows and columns.