What is Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source system designed for automating the deployment, scaling, and management of containerized applications. It groups individual containers, which are self-contained units of software, into logical units called pods for easier management and discovery.

Developed by Google and now maintained by a global community, Kubernetes leverages containerization technology to ensure efficient and reliable operation of applications across multiple servers or cloud environments.

What are the top use cases of Kubernetes?

Some of the top use cases of Kubernetes include:

- Container Orchestration: Kubernetes can manage a large number of containers efficiently, ensuring that they run where and when needed.

- Scalability: Kubernetes can scale applications out or in, either manually or automatically based on metrics such as CPU utilization, to meet changing demand.

- High Availability: Kubernetes helps keep applications available by automatically restarting failed containers and replacing pods when a node fails.

- DevOps Integration: Kubernetes plays a crucial role in DevOps practices, enabling automation and continuous delivery of applications.
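To make the scalability use case concrete, here is a minimal sketch of the replica calculation documented for the Horizontal Pod Autoscaler, a Kubernetes component this article does not otherwise cover. The function name and sample numbers are illustrative assumptions, and the sketch leaves out the tolerance window and the minimum and maximum replica bounds that a real autoscaler applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Replica count to aim for, before tolerance and min/max bounds are applied."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the replica count by the ratio of observed load to target load,
    # rounding up so the application is never left under-provisioned.
    return math.ceil(current_replicas * current_metric / target_metric)


# 4 pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
# 6 pods averaging 20% CPU against a 60% target: scale in.
print(desired_replicas(6, 20, 60))  # 2
```

The same ratio drives both scaling out and scaling in, which is why a single formula covers rising and falling demand.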

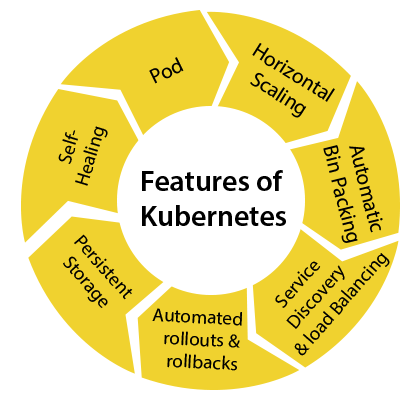

What are the features of Kubernetes?

Features of Kubernetes:

- Automated deployments and rollbacks: Kubernetes automates the process of deploying new versions of applications and rolling back to previous versions if necessary.

- Service discovery and load balancing: Kubernetes can expose a set of pods under a single DNS name or IP address and balance traffic across them, which supports high availability and performance.

- Self-healing capabilities: Kubernetes restarts failed containers and replaces pods from failed nodes with new pods on healthy machines, keeping applications resilient.

- Storage orchestration: It manages storage resources and enables persistent storage for containerized applications.

- Security: Kubernetes provides various security features, including role-based access control and network policy enforcement.
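The automated deployment feature is easier to picture with a small simulation. The sketch below models a rolling update that is bounded by two limits named after the Deployment settings maxSurge and maxUnavailable. It is a simplified model rather than the actual controller logic: the function name is hypothetical, and new pods are assumed to become ready immediately.

```python
def rolling_update(replicas: int, max_surge: int = 1, max_unavailable: int = 0):
    """Return the (old_pods, new_pods) counts after each step of a rolling update."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be zero")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge pods in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Stop old pods without dropping below replicas - max_unavailable in total.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        steps.append((old, new))
    return steps


for old_count, new_count in rolling_update(3):
    print(f"old={old_count} new={new_count}")
```

With three replicas and the default limits used here, the update replaces one pod per step, so the application never runs with fewer than three pods. A rollback can be thought of as the same process run toward the previous version.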

What is the workflow of Kubernetes?

Workflow of Kubernetes:

- Define the Application: Create a Kubernetes manifest file (YAML or JSON) defining the desired state of the application, including containers, services, volumes, and other resources.

- Deploy the Application: Use the kubectl command-line tool or Kubernetes API to deploy the application manifest to the Kubernetes cluster.

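- Monitor and Manage: Monitor the application’s health and performance with kubectl (for example, kubectl get, kubectl describe, and kubectl top, which relies on the metrics server) or an external monitoring stack, and manage the application lifecycle as needed.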

- Scale and Update: Scale the application horizontally or vertically based on demand, and perform updates or rollbacks using Kubernetes deployment strategies.

- Troubleshoot and Debug: Diagnose and troubleshoot any issues that arise during the application lifecycle using Kubernetes logging, monitoring, and debugging tools.
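The first two steps of this workflow can be sketched in a few lines. The example below builds a Deployment manifest as a Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The application name web, the image nginx:1.27, and the helper function are illustrative assumptions, not part of any real project.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 80) -> dict:
    """Build a minimal apps/v1 Deployment describing the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.27", replicas=3)
print(json.dumps(manifest, indent=2))
```

Saving the output as deployment.json and running kubectl apply -f deployment.json would submit it to a cluster. From there, kubectl get pods, kubectl scale deployment web --replicas=5, and kubectl rollout undo deployment/web map onto the monitor, scale, and rollback steps.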

How does Kubernetes work, and what is its architecture?

Kubernetes is a container management system designed to run containerized applications across multiple environments. Google originally developed it, drawing on experience with its internal Borg system, and it is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes operates at the cluster level: it treats the control plane and the worker nodes as a single system and orchestrates containerized applications across them in a distributed manner.

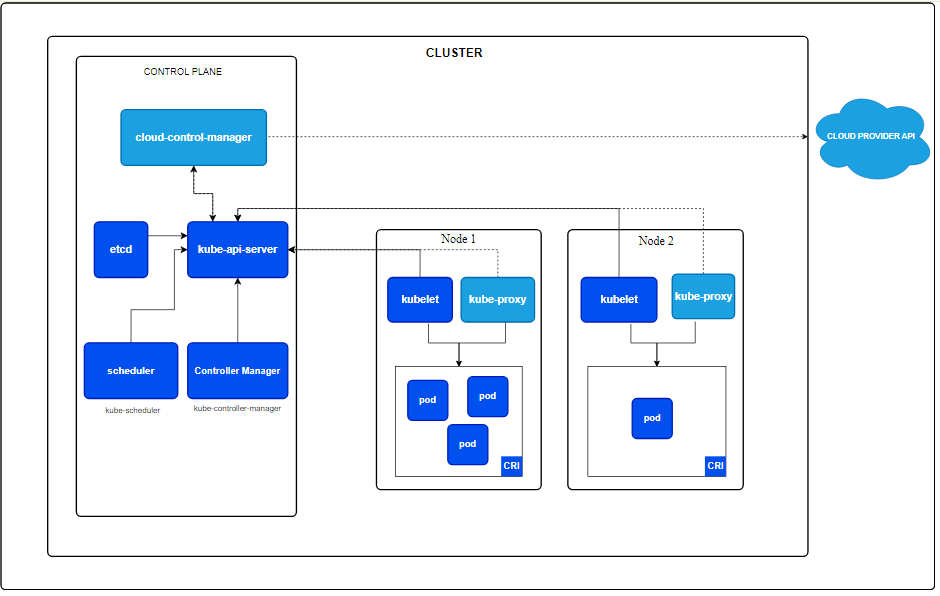

The architecture of Kubernetes is divided into two main components: the control plane and the worker nodes.

Control Plane

The control plane consists of several components that manage the cluster:

- kube-apiserver: This is the central management component of Kubernetes, exposing the Kubernetes API and serving as the frontend for the control plane. It processes REST operations, validates them, and updates the corresponding objects in etcd.

- etcd: A highly available key-value store used as Kubernetes’ backing store for all cluster data. It ensures data consistency and reliability across the control plane components.

- kube-scheduler: Watches for newly created pods that have no node assigned and selects a node for each one based on resource requirements, policies, and affinity specifications.

- kube-controller-manager: Runs the controllers that regulate the state of the cluster, such as the replication controller, which maintains the correct number of pod replicas for each replicated workload.

- cloud-controller-manager: If the cluster is partly or entirely cloud-based, this component links the cluster to the cloud provider’s API and runs only the controllers that are specific to that provider.
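As a rough illustration of what the scheduler does, the sketch below filters out nodes that cannot fit a pod and then scores the remaining ones. It is a toy model under stated assumptions: the Node class, the field names, and the "most free resources wins" scoring rule are hypothetical, and the real kube-scheduler applies many more filters and scoring rules.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_memory_mib: int


def pick_node(pod_cpu_millis: int, pod_memory_mib: int, nodes: List[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if the pod fits nowhere."""
    # Filtering: keep only nodes with enough free CPU and memory for the pod.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod_cpu_millis and n.free_memory_mib >= pod_memory_mib
    ]
    if not feasible:
        return None  # the pod would stay pending until capacity appears
    # Scoring: prefer the node with the most free CPU, then the most free memory.
    best = max(feasible, key=lambda n: (n.free_cpu_millis, n.free_memory_mib))
    return best.name


nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millis=1500, free_memory_mib=8192),
]
print(pick_node(1000, 2048, nodes))  # node-b
```

Separating filtering from scoring mirrors how the scheduler first rules out infeasible nodes and only then ranks the candidates.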

Worker Nodes

Worker nodes are the machines that run the containerized applications. Each node includes:

- kubelet: An agent that runs on each node, ensures that the containers described in each pod’s specification are running and healthy, and communicates with the control plane.

- kube-proxy: A network proxy that maintains network rules on nodes, allowing network communication to pods from network sessions inside or outside the cluster.

- Container runtime: Software responsible for running the containerized applications. Kubernetes supports any runtime that adheres to the Kubernetes CRI (Container Runtime Interface).
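To show the idea behind kube-proxy’s network rules, the sketch below maps a service address to the pods behind it and picks one backend per connection. This is only a conceptual model: the real kube-proxy programs packet-forwarding rules on the node rather than routing traffic in Python, and every IP address, port, and name here is made up for illustration.

```python
import random

# Hypothetical rule table: (service IP, service port) -> pod endpoints behind it.
service_table = {
    ("10.96.0.10", 80): [
        ("10.244.0.2", 8080),
        ("10.244.1.2", 8080),
        ("10.244.2.2", 8080),
    ],
}


def route(service_ip: str, service_port: int, rng=random):
    """Pick one pod endpoint for a new connection to the given service."""
    backends = service_table.get((service_ip, service_port))
    if not backends:
        raise LookupError(f"no endpoints for {service_ip}:{service_port}")
    # Random selection spreads connections across the available pods.
    return rng.choice(backends)


pod_ip, pod_port = route("10.96.0.10", 80)
print(f"forwarding to {pod_ip}:{pod_port}")
```

When pods are added or removed, only the endpoint list changes, so clients can keep using the same service address.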

Pods

Pods are the smallest deployable units of computing that can be created and managed in Kubernetes. A pod can contain one or more containers, with shared storage and network resources, and a specification for how to run the containers. Pods are ephemeral and can be replaced by Kubernetes at any time, which suits stateless applications well; applications that must keep data across pod replacements need persistent storage.
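Because pods are replaceable, Kubernetes controllers repeatedly compare the desired state with what is actually running and correct any difference. The sketch below models one pass of such a loop for a replicated application. The function, the pod names, and the simulated failure are illustrative assumptions, not the real controller code.

```python
import itertools


def reconcile(desired_replicas: int, running_pods: list, new_names) -> list:
    """Return the pod list after one reconciliation pass."""
    pods = list(running_pods)
    # Too few pods: create replacements until the desired count is reached.
    while len(pods) < desired_replicas:
        pods.append(next(new_names))
    # Too many pods: remove the surplus.
    del pods[desired_replicas:]
    return pods


names = (f"web-{i}" for i in itertools.count(1))
pods = reconcile(3, [], names)
print(pods)  # ['web-1', 'web-2', 'web-3']

pods.remove("web-2")  # simulate a pod being lost
pods = reconcile(3, pods, names)
print(pods)  # ['web-1', 'web-3', 'web-4']
```

Note that the lost pod is not revived. A new pod with a new identity takes its place, which is why anything stored only inside a pod should be treated as disposable.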

Networking

Kubernetes relies on network plugins that implement the Container Network Interface (CNI) specification to enable networking between pods, whether they run on the same node or on different nodes. Because CNI is a common standard, the same plugins can be used across different container orchestration tools.
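One common way for a network plugin to give every pod its own IP address is to carve a cluster-wide address range into one subnet per node. The sketch below shows that idea with Python’s standard ipaddress module. The address range, the node names, and the choice to reserve the first address on each node are assumptions for illustration, and actual plugins differ in how they allocate addresses.

```python
import ipaddress

# Hypothetical cluster-wide pod address range, split into one /24 per node.
cluster_cidr = ipaddress.ip_network("10.244.0.0/16")
node_names = ["node-a", "node-b", "node-c"]
node_subnets = dict(zip(node_names, cluster_cidr.subnets(new_prefix=24)))


def first_pod_ips(node: str, count: int) -> list:
    """Return the first `count` pod addresses available on a node's subnet."""
    hosts = iter(node_subnets[node].hosts())
    next(hosts)  # assume the first address is reserved for the node's gateway
    return [str(next(hosts)) for _ in range(count)]


for node in node_names:
    print(node, node_subnets[node], first_pod_ips(node, 2))
```

Because each node owns a distinct subnet, a pod’s address also identifies the node that hosts it, which keeps routing between nodes simple.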

Best Practices

For architecting Kubernetes clusters, it’s important to:

- Keep the Kubernetes version updated.

- Invest in training for development and operations teams.

- Establish governance across the enterprise.

- Enhance security with image-scanning processes and adopt role-based access control (RBAC).

- Use livenessProbe and readinessProbe so that Kubernetes can restart unhealthy containers and route traffic only to pods that are ready.
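As an example of the last practice, the sketch below shows a container definition with both probes, followed by a small function that models how a failure threshold works. The probe field names follow the Kubernetes pod specification, while the paths /healthz and /ready, the image, and the helper function are hypothetical.

```python
# Container section of a pod template, written as a Python dictionary.
container = {
    "name": "web",
    "image": "nginx:1.27",
    "livenessProbe": {
        "httpGet": {"path": "/healthz", "port": 80},  # hypothetical endpoint
        "periodSeconds": 10,
        "failureThreshold": 3,
    },
    "readinessProbe": {
        "httpGet": {"path": "/ready", "port": 80},  # hypothetical endpoint
        "periodSeconds": 5,
        "failureThreshold": 3,
    },
}


def threshold_reached(probe_results, failure_threshold: int) -> bool:
    """True once the probe has failed `failure_threshold` times in a row."""
    consecutive_failures = 0
    for succeeded in probe_results:
        consecutive_failures = 0 if succeeded else consecutive_failures + 1
        if consecutive_failures >= failure_threshold:
            return True
    return False


threshold = container["livenessProbe"]["failureThreshold"]
print(threshold_reached([True, False, False, True, False], threshold))  # False
print(threshold_reached([True, False, False, False], threshold))  # True
```

For a liveness probe, reaching the threshold leads to a container restart. For a readiness probe, it only takes the pod out of service traffic until the probe succeeds again.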

Kubernetes’ architecture is designed to be flexible and scalable: applications can scale out quickly to meet changing needs, and the same tooling works across environments, from on-premises to cloud-based setups. This makes Kubernetes a powerful tool for managing containerized applications.