Source: pubs.spe.org

A real-time deep-learning model is proposed to classify the volume of cuttings from a shale shaker on an offshore drilling rig by analyzing the real-time monitoring video stream. Unlike the traditional, time-consuming video-analytics method, the proposed model performs the classification in real time with high accuracy. The approach is composed of three modules: real-time video processing, region-of-interest proposal, and data preprocessing with deep-learning classification. Compared with results manually labeled by engineers, the model achieves highly accurate results in real time without dropping frames.

Introduction

Many oil and gas companies already have a complete work flow to guide the maintenance and cleaning of the borehole. A well-formulated work flow helps support well integrity and reduce drilling risks and costs. One traditional method relies on human observation of cuttings at the shale shaker combined with a hydraulic and torque-and-drag model; the operation includes a number of cleanup cycles. Continuous manual monitoring of the cuttings volume at the shale shaker becomes the bottleneck of this work flow. It cannot provide a consistent evaluation of the hole-cleaning condition because personnel are not always available, and the torque-and-drag operation is discrete, with a break between cycles.

Most of the previous work used image-analysis techniques to perform quantitative analyses on the cuttings volume. The traditional image-processing approach requires significant work on feature engineering. Because the raw data are usually noisy with missing components, preprocessing and augmenting the data play an important role in making the learning model more efficient and productive. The deep-learning framework, on the other hand, automatically discovers the representations needed for feature detection or classification from raw data. It can help overcome the difficulties in setting up and monitoring devices in a harsh environment, and the data-acquisition requirement for a cuttings-volume-monitoring system at the offshore rig might be relaxed.

The objective of this study is to verify the feasibility of building a real-time, automatic cuttings-volume-monitoring system on a remote site with a limited data-transmission bandwidth. The minimum data-acquisition hardware requirement includes the following:

- Single uncalibrated charge-coupled-device camera

- Inconsistent lighting sources

- Low-bit-rate transmission

- Image-processing unit without graphics-processing-unit support (e.g., a laptop)

A deep neural network (DNN) is adopted to perform the image processing and classification on cuttings volumes from a shale shaker at a remote rig site. Specifically, the convolutional neural networks are implemented as feature extractors and classifiers in the described model. The main contributions of this study can be summarized as follows:

- A deep-learning framework that can classify the volume of cuttings in real time

- A real-time video-analysis system that requires minimal hardware setup effort and is capable of processing low-resolution images

- An object-detection work flow to automatically detect the region covered by cuttings

- A multithread video encoder/decoder implemented to improve real-time video-streaming processing

Overview of the Real-Time Cuttings-Volume Monitoring System

The work flow consists mainly of the following subprocesses: real-time video processing (decoding and encoding), region of interest (ROI) proposal, and data preprocessing with deep-learning classification. During the drilling process, cuttings with mud are transported through the vibrating shale shaker. The authors developed an intelligent video-processing engine to analyze videos captured as the cuttings are transported to the shaker. The analysis results are transmitted to and displayed on a monitor in the office in real time, so the drilling engineer can obtain cuttings-volume information promptly. The continuous, real-time inference (classification) results can be accumulated into a histogram for further analysis.

The real-time video-processing module is designed for adapting to the dynamic drilling environment. Monitoring the cuttings volume at the shale shaker in real time is an important approach to overall drilling-risk management.

Methodologies

Video Frame Extraction. A two-thread mechanism is used for reading and writing the source stream in real time. The decoding process should be conducted in an adaptive manner because the server pushes the video stream continuously. If the decoding process fails to keep up with the speed of the video stream, synchronization could be lost and frames could be dropped. To overcome this obstacle, a fast thread-safe circular buffer is implemented.
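
A minimal sketch of the two-thread pattern with a thread-safe circular buffer is given here. The class name `FrameRingBuffer`, the overwrite-oldest policy when the buffer is full, and the integers standing in for decoded frames are assumptions made for illustration; the source does not describe the authors' implementation.

```python
import threading
from collections import deque

_END = object()  # hypothetical sentinel marking the end of the stream


class FrameRingBuffer:
    """Fixed-capacity, thread-safe circular buffer (illustrative helper).

    Assumed policy: when full, the oldest frame is overwritten so the
    writer thread never blocks on a slow reader.
    """

    def __init__(self, capacity):
        self._frames = deque(maxlen=capacity)  # deque discards the oldest item when full
        self._cond = threading.Condition()
        self.overwritten = 0  # frames discarded because the reader lagged

    def put(self, frame):
        with self._cond:
            if len(self._frames) == self._frames.maxlen:
                self.overwritten += 1
            self._frames.append(frame)
            self._cond.notify()

    def get(self, timeout=None):
        """Return the oldest buffered frame, or None if the wait times out."""
        with self._cond:
            if not self._cond.wait_for(lambda: len(self._frames) > 0, timeout):
                return None
            return self._frames.popleft()


def run_demo(n_frames=100, capacity=8):
    """Push integer stand-in frames from a writer thread to a reader thread."""
    buf = FrameRingBuffer(capacity)
    received = []

    def writer():
        for i in range(n_frames):
            buf.put(i)
        buf.put(_END)

    def reader():
        while True:
            frame = buf.get(timeout=5.0)
            if frame is None or frame is _END:
                break
            received.append(frame)

    threads = [threading.Thread(target=reader), threading.Thread(target=writer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return received, buf.overwritten
```

With sufficient capacity and a reader that keeps pace with the stream, the `overwritten` counter stays at zero; a nonzero value signals that the consumer is falling behind.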

ROI Proposal. To guarantee steady inference results, the users (engineers or developers) need to provide the ROI to indicate the area in which cuttings are flowing on the shaker. The described learning model focuses on this ROI and therefore receives input data with much less variety. The camera is assumed not to change its position or angle after the ROI is set. The ROI filters out much of the noise that would otherwise interfere with the classifier. A manual or an automatic approach can be used to facilitate ROI selection. In the manual approach, before the decoding of the video stream begins, the interactive graphical user interface (GUI) presents one frame to the user showing the position of the shaker. The user can highlight the ROI simply by selecting four corner points on this first frame displayed by the GUI.
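
As a hedged illustration of how four user-selected corner points could be turned into an ROI, the sketch below masks the pixels outside a convex quadrilateral and crops to its bounding rectangle. The function names `quad_mask` and `crop_roi`, the assumption that the corners are listed in order around a convex shape, and the use of NumPy are all choices made for this example, not details from the source.

```python
import numpy as np


def quad_mask(height, width, corners):
    """Boolean mask of the pixels inside a convex quadrilateral.

    corners: four (x, y) points listed in order around the quadrilateral.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    pts = np.asarray(corners, dtype=float)
    crosses = []
    for i in range(4):
        (x0, y0), (x1, y1) = pts[i], pts[(i + 1) % 4]
        # The sign of the cross product tells which side of the edge a pixel is on.
        crosses.append((x1 - x0) * (ys - y0) - (y1 - y0) * (xs - x0))
    crosses = np.stack(crosses)
    # Inside means "on the same side of every edge", for either winding order.
    return np.all(crosses >= 0, axis=0) | np.all(crosses <= 0, axis=0)


def crop_roi(frame, corners):
    """Zero out pixels outside the quadrilateral, then crop to its bounding box."""
    mask = quad_mask(frame.shape[0], frame.shape[1], corners)
    masked = frame.copy()
    masked[~mask] = 0
    xs = [int(x) for x, _ in corners]
    ys = [int(y) for _, y in corners]
    return masked[min(ys):max(ys) + 1, min(xs):max(xs) + 1]
```

For example, `crop_roi(frame, [(2, 1), (8, 1), (8, 6), (2, 6)])` returns a 6-row by 7-column crop of `frame`.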

However, manual region selection requires repeated labor. For a given shaker, workers might change the camera angle slightly during the drilling operation, purposely or accidentally. For different shakers, the preset camera angle might be different.

To automate this procedure, a faster region-based convolutional-neural-network (faster R-CNN) ROI detection method can detect the region that contains the cuttings flow. For training, raw video frames are used as input, each labeled manually with a bounding box around the ROI. Every raw frame is fed into a feature extractor, which produces a feature map. The feature map is fed into a much smaller convolutional neural network that takes the feature map as input and outputs the region proposals. Those proposals are fed into a classifier that assigns each proposal to either the background class or the ROI class. If a region proposal is classified into the ROI class, its coordinates, width, and height are adjusted further by a region regressor. Backpropagation is used to train the model.
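
The final adjustment step can be sketched as follows. The sketch assumes the standard faster R-CNN box parameterization, in which the regressor predicts center offsets scaled by the proposal size and log-scale changes in width and height; the source does not state which parameterization the authors used, and the function name `apply_box_deltas` is hypothetical.

```python
import numpy as np


def apply_box_deltas(proposals, deltas):
    """Refine region proposals with predicted regression offsets.

    proposals: (N, 4) array of boxes as (center_x, center_y, width, height).
    deltas:    (N, 4) array of predicted offsets (tx, ty, tw, th).
    """
    proposals = np.asarray(proposals, dtype=float)
    deltas = np.asarray(deltas, dtype=float)
    # Center shifts are expressed as fractions of the proposal size.
    cx = proposals[:, 0] + deltas[:, 0] * proposals[:, 2]
    cy = proposals[:, 1] + deltas[:, 1] * proposals[:, 3]
    # Width and height are rescaled multiplicatively via the exponential.
    w = proposals[:, 2] * np.exp(deltas[:, 2])
    h = proposals[:, 3] * np.exp(deltas[:, 3])
    return np.stack([cx, cy, w, h], axis=1)
```

With all offsets equal to zero, the proposal is returned unchanged, so the regressor only has to learn corrections relative to the proposal.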

The authors used 50 video frames for training and four images for testing. During training of the cuttings-area detector, the classification loss decreases and converges after approximately 1,800 training steps. The localization loss grows at the beginning of training but then gradually decreases and converges at approximately 2,000 training steps.

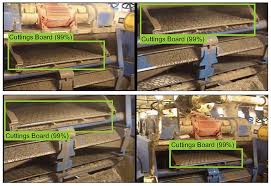

Fig. 1 illustrates the results of ROI detection. The bounding boxes contain the predicted region that covers the flow of cuttings. The machine predicts the correct region with high confidence. The success of implementing ROI detection brings the following benefits to the project:

- Automation of the attention mechanism

- Adaptation to different camera angles and distances

Randomized Subsampling Inside ROI. An ROI is selected either manually by the user at the beginning of the video stream or automatically by the cuttings-region detector based on the faster R-CNN framework. However, vibration or wind might nudge the camera's position and angle, which compromises classification performance if the system is trained without proper motion compensation. To overcome this problem, this study proposes a randomized subsampling strategy that uses a stack of small image patches. Image patches are densely sampled from the ROI. Instead of the entire ROI, this stack of image patches is fed to the DNN as input.
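
A minimal sketch of such patch sampling is shown here, assuming square patches drawn at uniformly random positions inside the ROI. The function name `sample_patches` and the patch size and patch count in the usage note are illustrative assumptions; the source does not report the values the authors used.

```python
import numpy as np


def sample_patches(roi, patch_size, n_patches, rng=None):
    """Stack `n_patches` square patches taken at random positions inside `roi`.

    roi: array of shape (H, W) or (H, W, C).
    Returns an array of shape (n_patches, patch_size, patch_size[, C]).
    """
    rng = np.random.default_rng() if rng is None else rng
    height, width = roi.shape[:2]
    if patch_size > height or patch_size > width:
        raise ValueError("patch_size must fit inside the ROI")
    # Top-left corners are chosen so that every patch lies fully inside the ROI.
    tops = rng.integers(0, height - patch_size + 1, size=n_patches)
    lefts = rng.integers(0, width - patch_size + 1, size=n_patches)
    return np.stack([
        roi[t:t + patch_size, l:l + patch_size]
        for t, l in zip(tops, lefts)
    ])
```

For instance, `sample_patches(roi, patch_size=16, n_patches=32)` on a 60 x 80 color ROI yields an array of shape `(32, 16, 16, 3)`. Because each patch covers only a small window, a slight shift of the camera changes which pixels fall in a given patch but not the overall statistics of the stack.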

Principal-Component Analysis (PCA) Whitening Transformation. The PCA whitening transformation is applied to video frames immediately before they are fed into the DNN. The goal is to make the input less redundant. The PCA whitening transformation removes the underlying correlations among adjacent frames and potentially improves the convergence of the model.
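
The transformation itself can be sketched in a few lines. This is a generic PCA whitening routine, assuming each row of the input matrix is one flattened sample; the function name `pca_whiten` and the small regularization constant `eps` are assumptions, and the source does not specify how the authors arranged the frame data.

```python
import numpy as np


def pca_whiten(samples, eps=1e-5):
    """PCA-whiten a data matrix of shape (n_samples, n_features).

    The output has decorrelated features with approximately unit variance.
    """
    samples = np.asarray(samples, dtype=float)
    centered = samples - samples.mean(axis=0)
    covariance = centered.T @ centered / centered.shape[0]
    # eigh is appropriate because the covariance matrix is symmetric.
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)
    # Rotate onto the principal axes, then rescale each axis to unit variance.
    # eps guards against division by near-zero eigenvalues.
    return (centered @ eigenvectors) / np.sqrt(eigenvalues + eps)
```

After whitening, the covariance matrix of the output is close to the identity, which is the sense in which the input becomes less redundant.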

Experiment and Performance Evaluation

To evaluate performance, the proposed method was tested on a live video stream, and the real-time classification results were compared with the manual annotation. On the basis of criteria used by rig engineers monitoring the return cuttings flow in real time, the cuttings volume was classified into four discrete levels: extra heavy, heavy, light, and none. Each video was labeled by four experts (the ground-truth labeling represents consensus among the experts). The testing results show that the system can handle the live-stream video without dropping frames. The proposed DNN successfully classifies frames from all four classes. The results show that the proposed model achieves a significant performance boost compared with traditional networks.
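
One way to carry out such a comparison is a confusion matrix over the four levels. The sketch below is illustrative only: the helper names are hypothetical, and the labels in the usage note are toy values, not results from the study.

```python
LEVELS = ["none", "light", "heavy", "extra heavy"]


def confusion_matrix(truth, predicted, levels=LEVELS):
    """Count label pairs; rows are expert labels, columns are model labels."""
    index = {name: i for i, name in enumerate(levels)}
    matrix = [[0] * len(levels) for _ in levels]
    for t, p in zip(truth, predicted):
        matrix[index[t]][index[p]] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal, i.e. where the model matches the experts."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0
```

With toy labels such as `truth = ["none", "light", "heavy"]` and `predicted = ["none", "light", "light"]`, the off-diagonal entry in the "heavy" row shows which level the model confused it with, which a single accuracy figure would hide.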