Introduction

Data is the lifeblood of modern business, but raw data is like crude oil: it is useless until it is refined. For years, software developers had DevOps to speed up their work, while data teams remained stuck in slow, manual processes. This created a massive gap: data engineering was slow, error-prone, and difficult to scale. The DataOps Certified Professional (DOCP) program bridges this gap. It applies the best parts of DevOps (automation, collaboration, and continuous improvement) to the world of data. If you want to lead data teams or build high-speed data pipelines, this guide is for you. I have seen the industry shift from simple databases to massive AI-driven data lakes, and I can tell you that DataOps is the most critical skill for the next decade.

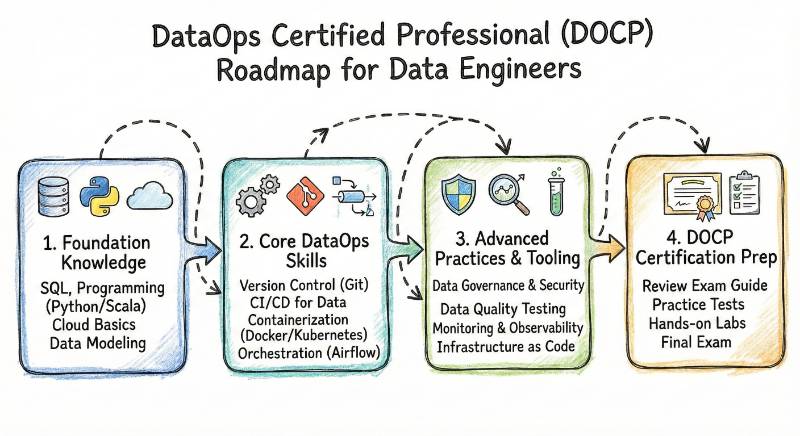

What is the DataOps Certified Professional (DOCP) Certification?

What it is:

The DataOps Certified Professional (DOCP) is an advanced certification designed to validate your ability to automate and streamline data workflows. It focuses on reducing the cycle time of data analytics while ensuring high data quality and security.

Who should take it:

This program is perfect for Data Engineers, Data Architects, Database Administrators, and DevOps Engineers who want to move into data-centric roles. Engineering Managers who oversee data platforms will also find this incredibly valuable for understanding team efficiency.

Skills you’ll gain

- Orchestration and Automation: You will learn how to automate complex data pipelines from ingestion to visualization.

- Data Quality Testing: You will master techniques to catch “bad data” before it hits your production dashboards.

- Version Control for Data: Learn how to treat your data infrastructure and code with the same rigor as software applications.

- CI/CD for Data: Implement continuous integration and delivery practices specifically for data environments.

- Collaboration Frameworks: Understand how to break down silos between data scientists, engineers, and business stakeholders.

- Monitoring and Observability: Gain the ability to track pipeline performance and data health in real-time.
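
To make the data quality testing skill more concrete, here is a minimal Python sketch of a gate that flags bad rows before they reach a dashboard. The field names (`order_id`, `amount`) and the two rules are hypothetical, chosen only for illustration; a real pipeline would more likely use a dedicated data testing framework.

```python
from typing import Any

# Hypothetical required fields for an illustrative "orders" feed.
REQUIRED_FIELDS = ("order_id", "amount")


def find_bad_rows(rows: list[dict[str, Any]]) -> list[tuple[int, str]]:
    """Return (row_index, reason) pairs for rows that fail basic checks."""
    problems = []
    for index, row in enumerate(rows):
        # Rule 1: every required field must be present and not None.
        missing = [f for f in REQUIRED_FIELDS if row.get(f) is None]
        if missing:
            problems.append((index, f"missing: {', '.join(missing)}"))
            continue
        # Rule 2: the amount must be a non-negative number.
        if not isinstance(row["amount"], (int, float)) or row["amount"] < 0:
            problems.append((index, "amount must be a non-negative number"))
    return problems


rows = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": 2, "amount": None},
    {"order_id": 3, "amount": -5},
]
problems = find_bad_rows(rows)
for index, reason in problems:
    print(f"row {index}: {reason}")
```

In a CI/CD setup, a non-empty `problems` list would typically fail the build or block the deployment step rather than just print a message.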

Real-world projects you should be able to do after it

- Automated ETL Pipeline: Build a pipeline that automatically triggers, tests, and deploys data updates with zero manual intervention.

- Self-Service Data Platform: Create an environment where data scientists can spin up their own datasets without waiting for IT.

- Data Quality Dashboard: Design a system that alerts the team the moment a data source sends inconsistent or missing information.

- Environment Management: Use Infrastructure as Code (IaC) to recreate data environments for testing in minutes.
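
The automated pipeline project above boils down to running steps in dependency order and stopping when a check fails. The sketch below shows that idea with Python's standard-library `graphlib`; the three step functions and their sample data are hypothetical stand-ins, and an orchestrator such as Airflow would replace this hand-rolled runner in practice.

```python
from graphlib import TopologicalSorter


# Hypothetical pipeline steps; each one reads and writes a shared state dict.
def extract(state):
    state["raw"] = [{"id": 1, "value": 10}, {"id": 2, "value": None}]


def validate(state):
    # Drop rows with missing values; abort the run if nothing valid remains.
    state["clean"] = [r for r in state["raw"] if r["value"] is not None]
    if not state["clean"]:
        raise ValueError("no valid rows to load")


def load(state):
    state["loaded"] = len(state["clean"])


TASKS = {"extract": extract, "validate": validate, "load": load}
# Map each task to the set of tasks that must finish before it.
DEPENDENCIES = {"extract": set(), "validate": {"extract"}, "load": {"validate"}}


def run_pipeline():
    state = {}
    order = list(TopologicalSorter(DEPENDENCIES).static_order())
    for name in order:
        TASKS[name](state)  # an exception here stops the whole run
    return order, state


order, state = run_pipeline()
print(order, state["loaded"])
```

Declaring dependencies as data rather than hard-coding the call order is the design choice that lets a scheduler trigger, retry, and parallelize steps without manual intervention.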

Preparation Plan

7–14 Days (The Fast Track):

If you already have a strong background in DevOps or Data Engineering, focus on the DataOps Manifesto and specific orchestration tools. Spend 2 hours daily reviewing automated testing for data and monitoring strategies.

30 Days (Standard Pace):

This is the most common path. Devote one week to each pillar: Data Quality, Orchestration, CI/CD for Data, and Organizational Culture. Practice with tools like Airflow or Jenkins to solidify your knowledge.

60 Days (Comprehensive Learning):

Ideal for beginners or those changing careers. Spend the first 30 days learning the basics of Cloud Data Warehousing and Git. Use the second 30 days to dive deep into the DOCP curriculum and build three small projects to test your skills.

Common Mistakes to Avoid in DataOps

Even after 20 years of watching the industry shift, I still see experienced teams fall into these traps. Transitioning to a DataOps mindset requires more than just new software; it requires a change in habits. Here are the most frequent mistakes to watch out for:

- Focusing Only on Tools: Many teams believe buying a new orchestration or cataloging tool will “fix” their data problems. Tools are useless if you don’t first fix your underlying processes and team culture.

- Ignoring Data Quality: There is a temptation to focus entirely on speed. However, delivering “dirty” or incorrect data faster is actually worse than delivering it late. Automated testing for data quality is non-negotiable.

- Resorting to Manual Fixes: When a pipeline breaks, it is tempting to manually patch the data to meet a deadline. This is a “technical debt” trap. If you don’t fix the underlying code, the error will inevitably return.

- Over-Engineering the Toolstack: Do not over-complicate your architecture early on. Start with simple, scalable automation and only add complex layers when the business case truly requires it.

- Neglecting Data Governance and Security: In the rush to make data accessible, teams often skip essential security protocols. DataOps must include “Security by Design” to protect sensitive information.

- Treating Data as Static: Unlike software code, data changes constantly. Ignoring drift, whether the source structure changes (schema drift) or the values and their meaning shift over time (data drift), will lead to silent pipeline failures.

- Failing to Involve End Users: Building a pipeline without consulting the analysts or data scientists who actually use the data leads to “perfect” pipelines that deliver the wrong insights.

- Skipping the Basics: You cannot master DataOps without a solid foundation in Linux, Git, and basic scripting. Skipping these fundamentals makes it nearly impossible to troubleshoot automated systems.
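
The "Treating Data as Static" mistake above can be partly guarded against with a structural check on incoming records. The following Python sketch compares one record against an expected schema; the column names and types in `EXPECTED_SCHEMA` are hypothetical, and this only catches structural changes, not shifts in the meaning or distribution of values.

```python
# Hypothetical expected schema for an illustrative feed: column name -> Python type.
EXPECTED_SCHEMA = {"user_id": int, "email": str, "signup_date": str}


def detect_schema_drift(record: dict, expected: dict = EXPECTED_SCHEMA) -> dict:
    """Compare one incoming record against the expected schema."""
    missing = sorted(set(expected) - set(record))
    unexpected = sorted(set(record) - set(expected))
    wrong_type = sorted(
        name
        for name, expected_type in expected.items()
        if name in record and not isinstance(record[name], expected_type)
    )
    return {"missing": missing, "unexpected": unexpected, "wrong_type": wrong_type}


# The source now sends user_id as a string, drops signup_date, and adds "plan".
incoming = {"user_id": "42", "email": "a@example.com", "plan": "pro"}
drift = detect_schema_drift(incoming)
print(drift)
```

Running a check like this at ingestion turns a silent failure into an explicit alert, which is the point of the warning in the list above.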

Best next certification after this

Once you have mastered DOCP, the best next step is the MLOps Certified Professional certification. It lets you apply your data pipeline skills to machine learning models, creating a seamless flow from raw data to AI-driven insights.

Master Certification Table

| Track | Level | Who it’s for | Prerequisites | Skills covered | Recommended order |
| --- | --- | --- | --- | --- | --- |
| DataOps | Professional | Engineers, Architects | Basic Data/DevOps knowledge | CI/CD, Data Quality, Orchestration | 1st in Data Track |
| DevOps | Foundation | Beginners, Developers | Basic Linux/Scripting | Docker, Jenkins, Git | 1st in DevOps Track |
| DevSecOps | Professional | Security Engineers | DevOps Basics | Security Automation, Compliance | After DevOps Professional |
| SRE | Expert | SREs, Ops Leads | Systems Admin | Error Budgets, Observability | After DevOps Professional |
| MLOps | Professional | Data Scientists | DataOps Basics | Model Deployment, Monitoring | After DataOps |
| FinOps | Associate | Managers, Cloud Leads | Cloud Basics | Cost Optimization, Finance | Any time |

Choose Your Path: 6 Learning Journeys

Selecting the right path is crucial for your career growth. Here is how you can align your interests with the right certification track.

1. The DevOps Path

This is the foundation for almost everything else. It is about breaking down the wall between developers and operations. You start by learning how to code, then how to containerize that code, and finally how to deploy it automatically. This path is for those who love speed and efficiency in software delivery.

2. The DevSecOps Path

In today’s world, security cannot be an afterthought. If you have a passion for “hacking” things for good, this is your route. You will learn how to inject security checks into the middle of the delivery pipeline so that vulnerabilities are caught before they ever reach a user.

3. The SRE (Site Reliability Engineering) Path

SRE is where software engineering meets systems administration. If you love solving puzzles and making systems “unbreakable,” this is for you. You will focus on high availability, performance, and building systems that can heal themselves when things go wrong.

4. The AIOps/MLOps Path

This is the frontier of technology. You will manage the lifecycle of Machine Learning models. Just like software needs a pipeline, AI models need a “factory” to be built, tested, and updated. It is a highly technical path that requires a mix of data science and systems engineering.

5. The DataOps Path

As discussed throughout this guide, this path is for data enthusiasts. It focuses on the “plumbing” of the data world. You ensure that the right data gets to the right person at the right time, and that it is accurate when it arrives. It is the backbone of any data-driven company.

6. The FinOps Path

Cloud costs can spiral out of control very quickly. FinOps is the practice of bringing financial accountability to the variable spend of the cloud. This path is excellent for those who have a mix of technical and business interests, focusing on efficiency and cost-savings.

Role → Recommended Certifications Mapping

| Current or Target Role | Primary Recommended Certification | Secondary/Specialized Certification |
| --- | --- | --- |
| DevOps Engineer | Certified DevOps Professional (CDP) | DevSecOps Certified Professional (DSOCP) |
| Site Reliability Engineer | Site Reliability Engineering (SRE) | Chaos Engineering Certified |
| Data Engineer | DataOps Certified Professional (DOCP) | MLOps Certified Professional (MLOCP) |
| Cloud Engineer | Cloud Solutions Architect (AWS/Azure) | FinOps Certified Associate |
| Platform Engineer | Certified Kubernetes Administrator (CKA) | DevOps Professional |
| Security Engineer | DevSecOps Certified Professional | Certified Cloud Security Expert |
| FinOps Practitioner | FinOps Certified Associate | Cloud Cost Optimization Specialist |
| Engineering Manager | DevOps for Managers | DataOps Executive Overview |

Top Institutions for DataOps Training

Choosing the right partner for your certification journey makes a world of difference. Here are the top institutions that offer training and certification support for the DataOps Certified Professional (DOCP) program.

DevOpsSchool

DevOpsSchool is a premier global leader in technical training. They offer a deep-dive curriculum for DOCP that includes hands-on labs, real-world case studies, and mentorship from industry veterans. Their approach focuses on practical application rather than just theory, ensuring you are job-ready from day one.

Cotocus

Cotocus specializes in high-end technical consulting and training. Their DOCP program is known for its intensive “bootcamp” style, which is perfect for working professionals who need to gain skills quickly. They focus heavily on current tools such as Airflow, dbt, and Snowflake.

Scmgalaxy

Scmgalaxy provides a vast library of resources and a community-driven approach to learning. Their training for DataOps is highly comprehensive, covering everything from the basics of version control to advanced data orchestration. It is an excellent choice for those who enjoy learning through community interaction.

BestDevOps

BestDevOps focuses on simplified learning paths for complex topics. Their DOCP training breaks down the DataOps manifesto into easy-to-understand modules. They are highly recommended for beginners or teams transitioning from traditional data management to modern DataOps.

DataOpsSchool

As a specialized institution, DataOpsSchool lives and breathes data. Their entire curriculum is built around the data lifecycle. Choosing them for your DOCP certification means you are learning from experts who focus exclusively on the challenges of data engineering and analytics.

Additional Specialized Institutions

- DevSecOpsSchool: Best if you want to integrate heavy security layers into your DataOps pipelines.

- SREschool: Great for learning how to apply reliability principles to big data platforms.

- AIOpsSchool: The perfect partner if your goal is to transition from DataOps into Machine Learning Operations.

- FinOpsSchool: Ideal for learning how to manage the high costs often associated with big data and cloud warehousing.

Next Certifications to Take

After completing your DOCP, you should consider these three directions:

- Same Track (Specialization): MLOps Certified Professional. Take your data pipelines to the next level by managing AI models.

- Cross-Track (Broadening Skills): Site Reliability Engineering (SRE). Learn how to keep your massive data platforms running with 99.99% uptime.

- Leadership (Career Growth): FinOps Certified Associate. Learn the business side of data so you can manage budgets and prove the ROI of your data initiatives.
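
The 99.99% uptime figure in the SRE option above is easier to reason about as a downtime budget. This short Python sketch does the arithmetic; the function name and the 30-day window are illustrative choices, not part of any certification syllabus.

```python
def allowed_downtime_minutes(availability_percent: float, days: int = 365) -> float:
    """Minutes of downtime permitted over `days` at the given availability target."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - availability_percent / 100)


yearly = allowed_downtime_minutes(99.99)
monthly = allowed_downtime_minutes(99.99, days=30)
print(f"{yearly:.1f} min/year, {monthly:.1f} min per 30 days")
```

At 99.99%, the budget works out to roughly 52.6 minutes of downtime per year, or about 4.3 minutes in a 30-day window, which is why that target usually requires automated recovery rather than manual fixes.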

Frequently Asked Questions (FAQs)

1. How difficult is the DOCP certification?

The DOCP is a professional-level certification. If you have a basic understanding of data and automation, you will find it challenging but manageable. It requires a mix of theoretical knowledge and practical skills in pipeline orchestration.

2. How much time do I need to prepare?

For most working engineers, 30 days of consistent study (about 5-7 hours a week) is sufficient. If you are new to the world of DevOps, you might want to dedicate 60 days to ensure you grasp the automation concepts.

3. Are there any prerequisites for DOCP?

There are no “hard” prerequisites, but we highly recommend having a basic understanding of SQL, Git, and at least one cloud platform (AWS, Azure, or GCP). Familiarity with Python or a scripting language is a big plus.

4. What is the sequence I should follow?

Start with the DataOps Foundation if you are a beginner. Move to the DOCP for your professional certification. After that, you can branch out into MLOps or SRE depending on your career goals.

5. What is the real value of this certification?

The value lies in the “expert” status it gives you. Companies are desperate for people who can fix their broken data processes. Having “DOCP” on your resume proves you have the framework to solve these high-value problems.

6. Will this certification help me get a salary hike?

Yes. Data Engineers with DataOps skills typically command 20-30% higher salaries than those who only know traditional ETL tools. It shifts you from a “worker” to an “architect” in the eyes of employers.

7. Can a manager take this course?

Absolutely. Engineering Managers and Product Owners benefit immensely. It helps them understand why data projects often get delayed and how to implement better processes to help their teams succeed.

8. Is the exam more theoretical or practical?

The exam is designed to test your ability to solve real-world problems. While there are multiple-choice questions, they are based on scenarios you would face in a daily job as a DataOps professional.

9. Does DOCP cover specific tools?

While DOCP is “tool-agnostic” (meaning it teaches principles), the training usually involves popular tools like Airflow, Jenkins, Docker, and various Cloud Data Warehouses to give you hands-on experience.

10. How long is the certification valid?

Like most high-end technical certifications, it is recommended to refresh your knowledge every 2-3 years as the data landscape evolves rapidly.

11. Can I take the exam online?

Yes, most providers offer a proctored online exam option, making it accessible to professionals globally, whether you are in India, the US, or Europe.

12. What makes DOCP different from a standard Data Engineering cert?

A standard cert teaches you how to move data. DOCP teaches you how to build a system that moves data automatically, reliably, and securely. It focuses on the process and the “Ops” side of things.

FAQs on DataOps Certified Professional (DOCP)

1. How does DOCP differ from a standard DevOps certification?

While DevOps focuses on the software development lifecycle (apps and code), DOCP is specifically tailored to the Data Lifecycle. Data has unique challenges, such as data quality, schema changes, and massive volume, which software code does not face. This certification focuses on how to handle those data-specific hurdles using automation.

2. Is the DOCP exam performance-based or multiple-choice?

The exam is a mix of scenario-based multiple-choice questions and practical application problems. You won’t just be asked for definitions; you will be presented with a failing data pipeline scenario and asked to identify the best DataOps-aligned solution to fix it.

3. What is the ROI for a company to sponsor an engineer for DOCP?

For a manager, the ROI is clear: faster time-to-insight and lower operational costs. Teams with DOCP-certified members typically see a 60% reduction in manual data errors and can deploy new data products days, or even weeks, faster than traditional teams.

4. Can I take this certification if I only know SQL?

SQL is a great start, but you will need to expand your horizons. The DOCP curriculum will introduce you to Git, CI/CD, and containerization (Docker). If you are willing to learn these “Ops” tools, your SQL background will provide the perfect context for the data you are automating.

5. How much weight does this certification carry for global roles?

The DOCP is recognized globally as a benchmark for modern data engineering. Whether you are applying for a role in India, the US, or Europe, having this certification on your LinkedIn profile signals that you are aligned with the DataOps Manifesto, a set of principles referenced by data teams worldwide.

6. Does the program cover “Data Observability”?

Yes. A significant portion of the DOCP curriculum is dedicated to monitoring and observability. You will learn how to build dashboards that don’t just show if a pipeline is “up or down,” but whether the data flowing through it is accurate and trustworthy.

7. Is there a “Fast Track” option for experienced DevOps Engineers?

Yes. If you already have a “Professional” level DevOps certification, you can often skip the foundational automation modules and focus entirely on the data-specific sections (Data Testing, Data Privacy, and Data Orchestration), allowing you to complete your prep in as little as 7–10 days.

8. Are there any hands-on projects required to pass?

While the exam itself is a timed test, the recommended training institutions (like DevOpsSchool) require the completion of a Capstone Project to earn their completion certificate. This project usually involves building a full end-to-end automated data pipeline.
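
The Data Observability answer above (question 6) separates "is the pipeline up" from "is the data trustworthy". As a rough Python sketch of that difference, the check below looks at freshness and row volume instead of process status. The thresholds (`max_lag`, `tolerance`), the sample numbers, and the fixed timestamp are all arbitrary assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def pipeline_health(last_loaded_at, row_count, expected_rows, now=None,
                    max_lag=timedelta(hours=1), tolerance=0.2):
    """Report whether data is fresh and whether volume is close to expectations."""
    now = now or datetime.now(timezone.utc)
    lag = now - last_loaded_at
    # Relative difference between the observed and expected row counts.
    volume_deviation = abs(row_count - expected_rows) / expected_rows
    return {
        "fresh": lag <= max_lag,
        "volume_ok": volume_deviation <= tolerance,
        "lag_minutes": lag.total_seconds() / 60,
    }


# Arbitrary fixed timestamp so the example is reproducible.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
report = pipeline_health(
    last_loaded_at=now - timedelta(minutes=90),
    row_count=950,
    expected_rows=1000,
    now=now,
)
print(report)
```

Here the pipeline could be reported as "up" while the data is 90 minutes stale, so `fresh` comes back false even though the row volume is within tolerance.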

Testimonials

“Before the DOCP program, our data team was constantly fighting fires. We didn’t have a way to test our data quality. After implementing the DataOps principles I learned, our deployment speed tripled, and our errors dropped by half. It changed my career.”

— Arjun M., Senior Data Engineer

“I’ve been in IT for years, but the DOCP certification gave me a fresh perspective on how to handle the massive scale of modern data. The transition from DevOps to DataOps was seamless thanks to the structured learning path at DevOpsSchool.”

— Elena S., Infrastructure Architect

“As a manager, I struggled to understand why my data scientists were always waiting for data. This course showed me the bottlenecks in our pipeline and how to automate them. It is a must-have for any data-driven leader.”

— Sanjay K., Engineering Manager

Conclusion

The world does not need more data; it needs better ways to manage the data we already have. The DataOps Certified Professional (DOCP) is more than just a piece of paper. It is a mindset shift that allows you to treat data with the same speed and reliability as software code. Whether you are an engineer looking to increase your market value or a manager trying to build a high-performing team, the path to success starts with mastering these principles. The journey might seem long, but with the right training partner and a clear learning path, you can become a leader in this exciting field. Start today, and be the expert that your company—and the industry—needs.